As courts increasingly adopt AI, knowing how to implement a responsible and ethical framework for its use is crucial to ensuring fairness, preventing bias, and maintaining public trust.

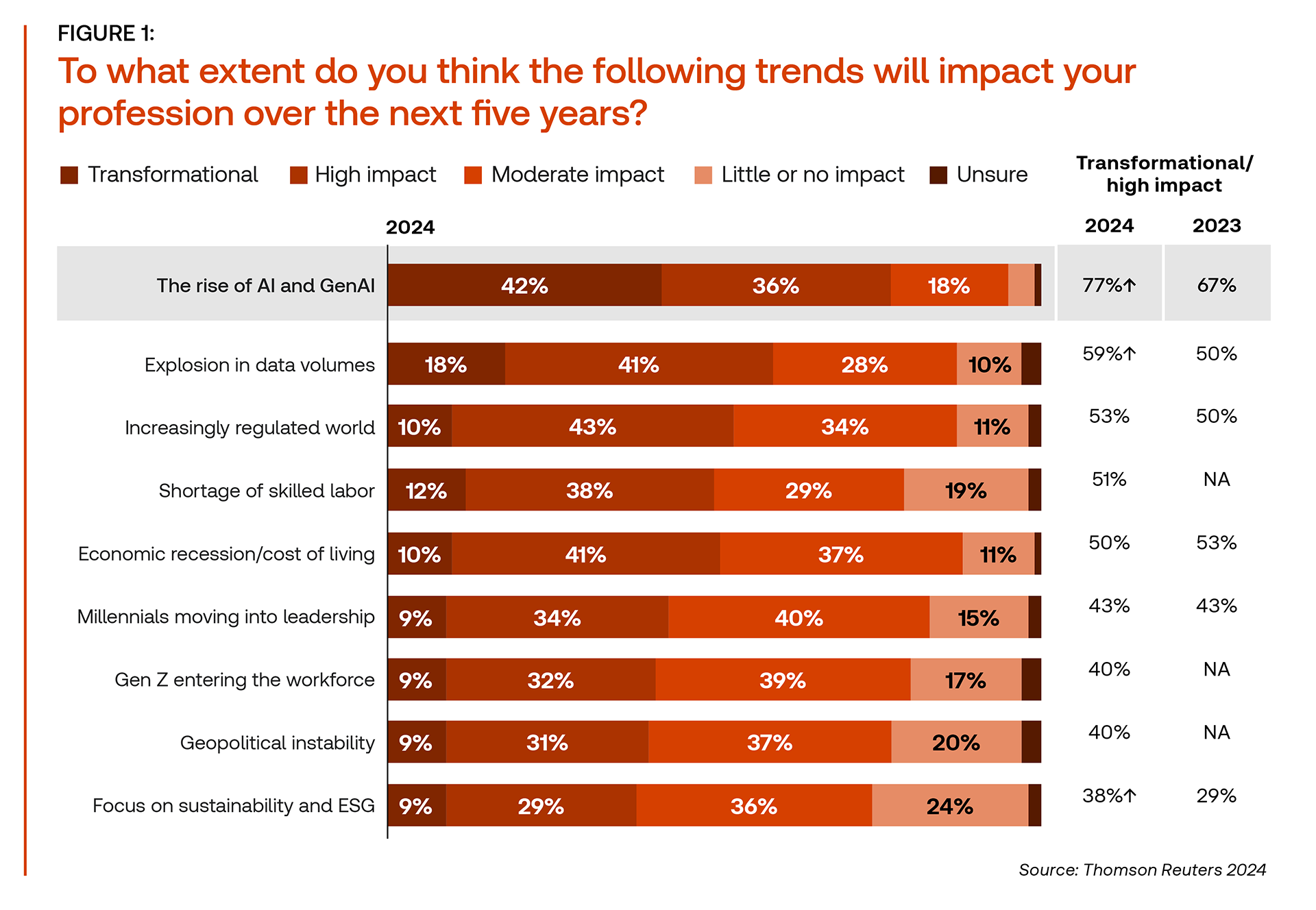

According to the Thomson Reuters Future of Professionals 2024 report, more than three-quarters (77%) of professionals surveyed said they believe that AI will have a high or transformational impact on their work over the next five years.

Notably, sentiment towards AI has shifted: professionals are moving from their initial fears about using AI, or about AI eliminating legal industry jobs, to an increasingly optimistic view of AI as a transformative force in their professions.

Framework for responsible AI

Despite this trend of optimism, courts must use AI responsibly if they are to take advantage of the opportunities it brings while mitigating the risks and addressing individuals' concerns. More specifically, the integration of AI into court systems demands a comprehensive ethical framework to ensure that justice is served and public trust is maintained.

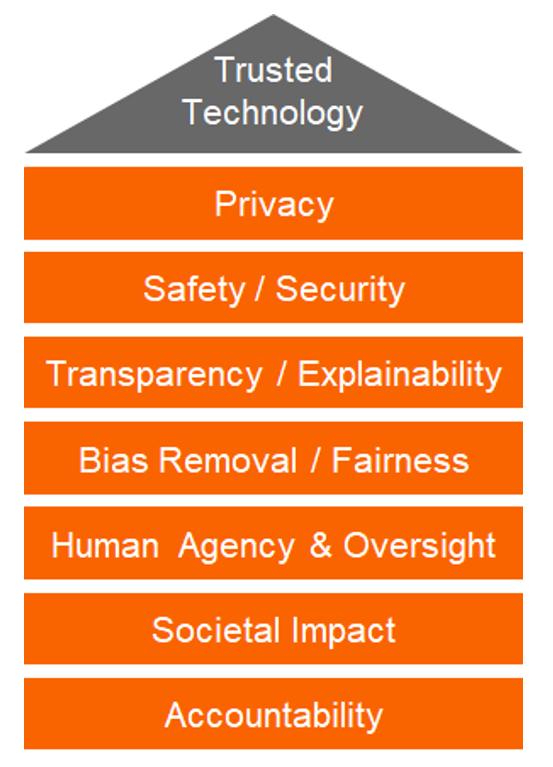

As Jacqueline Lam, Director for Responsible AI Strategic Engagements at Thomson Reuters, emphasized in a recent webinar hosted by the Thomson Reuters Institute (TRI) and the National Center for State Courts (NCSC): “AI systems should be designed and used in a way by courts that promotes fairness and avoids discrimination” based on an ethical AI framework, which includes:

- Privacy and security serve as foundational elements, requiring robust systems with proper data protection measures. Courts must implement encryption and secure storage protocols, and establish rigorous access controls, to safeguard the highly sensitive information they manage.

- Transparency represents another critical pillar, combined with thorough testing and monitoring of AI tools and continuous communication with the communities they affect.

- Human oversight stands as perhaps the most crucial element because it ensures that AI augments rather than replaces human judgment. “Human oversight of AI is vital to prevent bias,” particularly in contexts in which decisions impact individuals’ rights and liberties, Lam explains.

- Societal impact means that courts must conduct impact assessments to understand the consequences for their constituents.

Assessing risk is a necessary part of any ethical framework

Implementing an ethical framework for AI in the judicial system is crucial to ensure that judges, court administrators, and legal professionals can use technology competently and ethically. To help with this process, the Governance & Ethics working group (created as part of the TRI-NCSC partnership) recently published a white paper that acts as a how-to guide for judges, court staff, and legal professionals as they seek to use AI responsibly in courts.

As the paper makes clear, the central premise of an ethical framework involves understanding the levels of risk — from minimal to unacceptable — associated with AI and then applying these insights to determine appropriate use cases.

Classifying AI tools as low, moderate, high, or unacceptable risk, based on their application and the context in which they are used, gives courts a structured framework for assessing risk. For example, classifying risks through the lens of their impact would include:

Low-risk applications — These include predictive text and basic word processing, which require minimal human oversight, with a supervisor intervening only when necessary.

Moderate-risk applications — These tools include those used for drafting legal opinions and demand more direct human involvement to ensure accuracy and reliability.

High-risk applications — These can significantly impact human rights and necessitate stringent human oversight to prevent errors and biases.

Unacceptable-risk applications — Lastly, the tools with unacceptable risks, such as those automating life-and-death decisions, should be avoided altogether.
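One way a court's technology team might record these tiers is as a simple lookup from tool to risk tier to oversight requirement. The following is a minimal sketch under stated assumptions, not something taken from the white paper: the names `RiskTier`, `OversightPolicy`, `POLICIES`, `TOOL_REGISTER`, and `policy_for` are hypothetical, and the tier assigned to each tool simply mirrors the examples in the list above.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers described in the list above."""
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


@dataclass(frozen=True)
class OversightPolicy:
    permitted: bool   # may the tool be used at all?
    oversight: str    # level of human oversight the tier calls for


# Wording paraphrases the tier descriptions above (illustrative only).
POLICIES = {
    RiskTier.LOW: OversightPolicy(
        True, "minimal; a supervisor intervenes only when necessary"),
    RiskTier.MODERATE: OversightPolicy(
        True, "direct human involvement to check accuracy and reliability"),
    RiskTier.HIGH: OversightPolicy(
        True, "stringent human oversight to prevent errors and biases"),
    RiskTier.UNACCEPTABLE: OversightPolicy(
        False, "not applicable; the tool should be avoided altogether"),
}

# Hypothetical register: each court would assign tiers for its own context.
TOOL_REGISTER = {
    "predictive text": RiskTier.LOW,
    "drafting legal opinions": RiskTier.MODERATE,
    "automating life-and-death decisions": RiskTier.UNACCEPTABLE,
}


def policy_for(tool: str) -> OversightPolicy:
    """Return the oversight policy for a registered tool.

    An unregistered tool raises KeyError, so nothing is used unclassified.
    """
    return POLICIES[TOOL_REGISTER[tool]]


for tool in TOOL_REGISTER:
    policy = policy_for(tool)
    status = "permitted" if policy.permitted else "not permitted"
    print(f"{tool}: {status}; oversight: {policy.oversight}")
```

A register like this is only a starting point: the tier assigned to a tool depends on how and where it is used, so entries would need to be revisited as usage changes.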

However, Dan Linna, Director of Law and Technology Initiatives at Northwestern Pritzker School of Law & McCormick School of Engineering and fellow member of the TRI-NCSC Governance & Ethics working group, cautions that risks need to be monitored whatever the risk level of the application in use.

“Even among tools that may seem low impact, there may be in your particular context usages where there could be impacts, errors that could actually create greater harm than may have been recognized early on,” Linna explains. “So, when you’re engaging in these discussions, you should be talking about, ‘Well, how is this tool going to be used? What do we think its accuracy is going to be? And if it makes an error, is it going to be a low impact error?’”

Community engagement is key to responsible AI

The use of AI in courts presents both opportunities and challenges, and understanding them is crucial for responsible implementation. This is why community engagement is critical for maintaining public trust in the judicial system, particularly when implementing high-risk AI solutions.

By involving the community in the decision-making process and being transparent about responsible AI implementation, courts can ensure that the public understands the benefits and risks of AI solutions and can trust the judicial system to use these technologies responsibly.

As Kevin Miller, who is responsible for technology and fundamental rights at Microsoft and also a member of the TRI-NCSC Governance & Ethics working group, explains: “We have an opportunity with use of AI in the courts to be leaders in the criminal justice system around transparency, community engagement, and ensuring that the community is part of the conversation.” Indeed, the ethical concept of transparency reinforces the importance of building and maintaining the trust of court constituents. Courts must be open about their use of AI technology, especially in high-risk areas that affect individual liberties.

In addition, responsible implementation of AI helps to avoid other ethical pitfalls, such as:

Overreliance on AI systems — This is one of the significant pitfalls that can lead to a lack of human oversight in critical decision-making areas. “We can’t use these tools as if in a deterministic way,” Linna cautions, adding that users need to realize that any AI-provided answer is simply “the output you got that one time that you tried it with that specific prompt.” Having a human in the loop is important to address the fact that AI tools based on probabilistic models do not always yield consistent results.

Privacy issues — Breaches of privacy are a critical concern for courts. Sensitive legal data must be protected to maintain public trust and meet ethical obligations.

Presence of biases — AI can amplify existing biases if not carefully managed, leading to unfair outcomes. Across all of these pitfalls, understanding the difference between probabilistic and deterministic tools is essential for judicial professionals to use AI effectively while safeguarding justice and fairness.
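The difference between deterministic and probabilistic tools can be illustrated with a toy sketch. It is not a real AI model: the candidate answers, their weights, the prompt, and the function names `deterministic_tool` and `probabilistic_tool` are all hypothetical, chosen only to show why the same prompt can return different outputs on different runs.

```python
import random
from collections import Counter

# Hypothetical candidate outputs and weights for one fixed prompt.
CANDIDATES = ["answer A", "answer B", "answer C"]
WEIGHTS = [0.6, 0.3, 0.1]


def deterministic_tool(prompt: str) -> str:
    """Always return the single highest-weighted candidate."""
    best = max(range(len(CANDIDATES)), key=lambda i: WEIGHTS[i])
    return CANDIDATES[best]


def probabilistic_tool(prompt: str, rng: random.Random) -> str:
    """Draw one candidate at random according to WEIGHTS."""
    return rng.choices(CANDIDATES, weights=WEIGHTS, k=1)[0]


prompt = "Summarize the filing"  # illustrative prompt only
rng = random.Random()            # unseeded on purpose: runs will differ

# Ask each tool the same question 1,000 times and tally the answers.
deterministic_runs = Counter(deterministic_tool(prompt) for _ in range(1000))
probabilistic_runs = Counter(probabilistic_tool(prompt, rng) for _ in range(1000))

print("deterministic:", dict(deterministic_runs))
print("probabilistic:", dict(probabilistic_runs))
```

The deterministic tally contains a single answer, while the probabilistic tally is spread across several, which is one reason a human reviewer should not treat any single AI output as the only possible answer.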

Looking ahead at the promise of AI in courts

As the judicial system continues to evolve, AI can help bridge gaps for self-represented litigants, provide language support, and ensure faster case resolutions, all while maintaining the integrity and fairness that underpin the legal system. However, it is crucial for courts to approach AI integration with caution, ensuring that ethical frameworks guide its use in order to prevent bias, protect privacy, and uphold public trust.

“Judges and court administrators should be empowered to use technology competently and consistently with ethical obligations to best serve the public,” says Sara Omundson, Administrative Director of the Courts of the Idaho Supreme Court and TRI-NCSC AI Governance & Ethics working group member. “The checklist provided as part of the white paper is a practical tool that can be used to ensure those who work in the courts are meeting this goal.”

Practical tools of this kind also help to ensure that AI advances justice while safeguarding the fundamental rights of individuals.

You can find more about how courts are using AI-driven technology here.