Effective use of GenAI language models in legal work requires mastering the art of “prompt engineering,” which involves crafting clear, specific, and context-rich instructions to guide the AI and ensure accurate and relevant results.

Prompt engineering is the art and science of crafting effective instructions for AI language models. As these models become more sophisticated, the ability to guide them precisely has become a crucial skill.

For some, this new skill can be daunting. “We’ve gone through this before,” says Catherine Sanders Reach, Director at the Center for Practice Management at the North Carolina Bar Association. “If you remember the early days of legal research, we would create these very complex Boolean search structures with parentheticals and proximity to get very specific information that we were looking for.”

Crafting powerful prompts

However, this leaves many judicial staff asking where to start. To answer that question, the National Center for State Courts and the Thomson Reuters Institute recently hosted a webinar on prompt engineering with Reach and Valerie McConnell, Senior Director for Customer Success at Thomson Reuters.

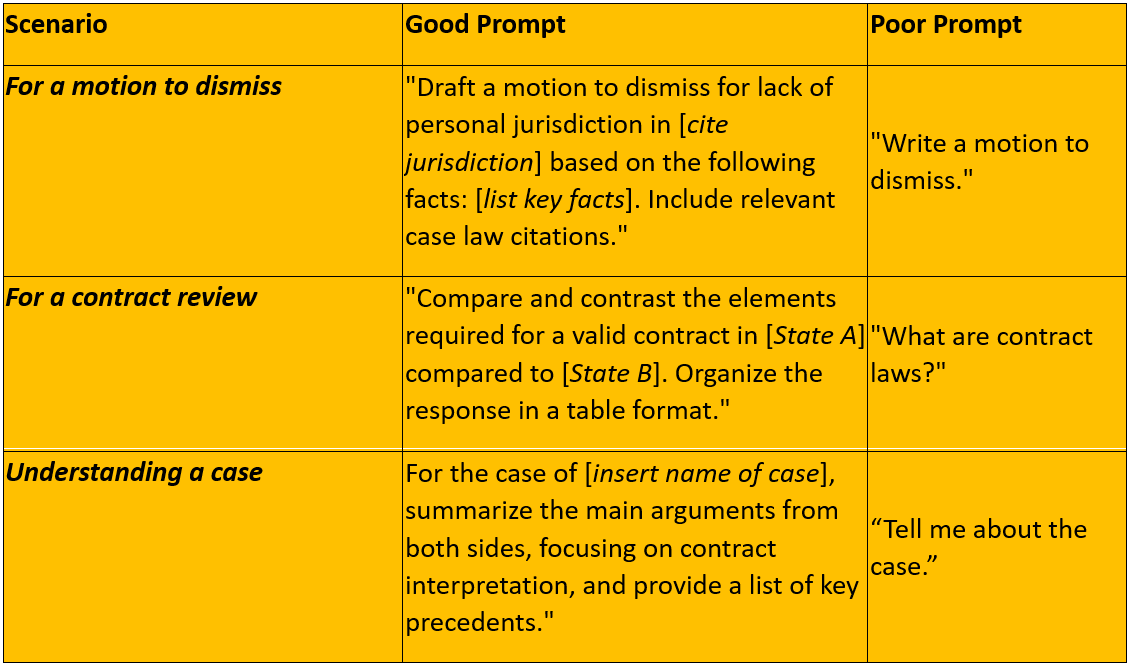

First, clarity, specificity, and context are essential in crafting better prompts. A well-defined prompt lets the AI understand the task without ambiguity, and providing background information or specifying the focus area helps it generate relevant responses. For example, when asking about a legal scenario, include pertinent case details or legal principles. “Context is key — and also with generalized-use large language models, such as ChatGPT, you want to be specific,” says Reach.

Second, the prompt should state the desired output and spell out what results are expected. Whether you need a bullet-point list, a summary, or a detailed analysis, say so explicitly to help the AI meet your expectations.
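
The two points above (give context, then state the desired output) can be sketched as a small helper. This is only an illustration: the function name `build_prompt`, its three fields, and the sample wording are assumptions made for this example, not something presented in the webinar.

```python
def build_prompt(task: str, context: str, output_format: str) -> str:
    """Assemble a prompt that states the task, its context, and the desired output."""
    parts = [
        f"Task: {task.strip()}",
        f"Context: {context.strip()}",
        f"Desired output: {output_format.strip()}",
    ]
    return "\n".join(parts)


# Hypothetical example values; replace them with the details of your own task.
prompt = build_prompt(
    task="Summarize the key holdings of the attached appellate opinion.",
    context="The reader is a trial court clerk preparing a bench memo.",
    output_format="A bullet-point list of no more than five items.",
)
print(prompt)
```

Keeping the three parts separate makes it harder to forget one of them, which is the main reason to use a helper like this instead of typing the prompt freehand.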

Composing compelling prompts for legal work

Effective prompt engineering for legal AI requires tailoring prompts to specific legal tasks while considering ethical and privacy concerns. Using AI that has been designed for legal tasks is important. When the tool is free, “you are the product,” Reach explains. “What you upload is probably subject [to being used for training]. So, you have to be careful.”

When crafting prompts for legal research or analysis, you should provide relevant context such as jurisdiction, area of law, and other key facts. You should also be specific about the desired output format and level of detail. For complex legal work, these additional tips could help:

Use a back-and-forth approach — Referred to as iterative refinement, this means starting with a basic prompt, evaluating the AI’s response, then refining and clarifying as needed. You should also ask follow-up questions to probe deeper or to correct any misunderstandings.

For complex legal issues, break them down into smaller sub-prompts. “A huge advantage of large language models is their ability to iterate and refine the discussion over the course of a chat,” says McConnell. “That means that when you’re prompting, you don’t put a lot of pressure on yourself. If you forgot to include something, or you phrase something inaccurately, or you think the AI misunderstood what you said, you’re not stuck.”

Ask the AI how to craft an effective prompt for the legal task — Known as meta-prompting, this technique can be powerful because it leverages the AI’s knowledge of legal concepts and terminology.

Experiment with different phrasings and approaches — What works well for one legal task may not be ideal for another. You should develop prompt templates for common legal workflows in order to improve consistency and efficiency. With practice, your prompt engineering skills will continue to improve.
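
Iterative refinement and meta-prompting, as described in the tips above, can be sketched in a few lines. The sketch assumes a conversation is stored as a list of role/content messages, which is a common convention but may not match your tool. `refine`, `meta_prompt`, and `fake_ask` are hypothetical names, and `fake_ask` is a stand-in that does not contact any real AI service.

```python
from typing import Callable

Message = dict[str, str]  # assumed shape: {"role": "user" or "assistant", "content": "..."}


def refine(history: list[Message], follow_up: str,
           ask: Callable[[list[Message]], str]) -> list[Message]:
    """Append a follow-up prompt and the model's reply to the conversation."""
    updated = history + [{"role": "user", "content": follow_up}]
    reply = ask(updated)
    return updated + [{"role": "assistant", "content": reply}]


def meta_prompt(task: str) -> str:
    """Ask the model to help write the prompt rather than answer the task directly."""
    return (
        "I need to write a prompt for the following legal task: "
        f"{task}. "
        "What details should I include, and how should I phrase the prompt?"
    )


def fake_ask(messages: list[Message]) -> str:
    # Placeholder for a real AI tool; it only reports how many messages it received.
    return f"(reply after {len(messages)} message(s))"


chat: list[Message] = []
# Start with a basic prompt, then refine it with a follow-up.
chat = refine(chat, "Summarize the elements of adverse possession.", fake_ask)
chat = refine(chat, "Limit the summary to one state and use a numbered list.", fake_ask)
print(len(chat))
print(meta_prompt("drafting a motion to compel discovery"))
```

Because each follow-up is sent along with the earlier turns, a correction or clarification builds on what has already been said instead of starting over.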

To put these principles into practice, see the examples below:
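
As one hypothetical illustration, a reusable template for a legal research prompt might look like the sketch below. The field names follow the context items mentioned earlier (jurisdiction, area of law, key facts, output format, level of detail). The template text, the function name `legal_research_prompt`, and the sample scenario are assumptions made for this example and are not taken from the webinar.

```python
from string import Template

# Hypothetical template for a common workflow; adjust the fields to your own practice.
LEGAL_RESEARCH_TEMPLATE = Template(
    "Jurisdiction: $jurisdiction\n"
    "Area of law: $area_of_law\n"
    "Key facts: $facts\n"
    "Question: $question\n"
    "Output format: $output_format\n"
    "Level of detail: $detail"
)


def legal_research_prompt(**fields: str) -> str:
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return LEGAL_RESEARCH_TEMPLATE.substitute(**fields)


example = legal_research_prompt(
    jurisdiction="(your state or court)",
    area_of_law="Landlord-tenant",
    facts="Tenant withheld rent after reporting a broken heater. No personal identifiers included.",
    question="What defenses might the tenant raise in an eviction proceeding?",
    output_format="Short summary followed by a bullet-point list",
    detail="Overview suitable for a first-pass review",
)
print(example)
```

Using `substitute()` rather than `safe_substitute()` is a deliberate choice here: a forgotten field fails loudly instead of producing a prompt with a gap in it. The sample facts omit names and other identifiers, in keeping with the privacy caution above.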

Solutions to common challenges

Legal professionals face several challenges when using AI tools, particularly around context limitations, inaccuracies, and verification. The context window of a large language model restricts how much information it can process at once. Users should know their AI tool’s context window limit and be mindful that, once that limit is reached, the AI may lose track of what it was told earlier in the chat. A user who encounters this issue can remedy it by including the relevant details from earlier in the chat in their next prompt.
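
The remedy described above, restating earlier details once a chat grows long, can be sketched as follows. The size estimate is a loud assumption: it treats roughly four characters as one token, which is only a rough rule of thumb, and real tools count tokens differently and publish their own limits. The names `estimate_tokens`, `near_limit`, and `with_recap`, and the sample details, are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Very rough size estimate: assumes about 4 characters per token."""
    return max(1, len(text) // 4)


def near_limit(messages: list[str], limit: int, margin: float = 0.8) -> bool:
    """Return True when the estimated conversation size reaches margin * limit."""
    used = sum(estimate_tokens(m) for m in messages)
    return used >= limit * margin


def with_recap(key_details: list[str], next_question: str) -> str:
    """Restate the relevant earlier details ahead of the next question."""
    recap = "\n".join(f"- {d}" for d in key_details)
    return f"Relevant details from earlier in this chat:\n{recap}\n\n{next_question}"


# Stand-ins for earlier turns in a long chat; the limit is an arbitrary example value.
messages = ["x" * 400, "y" * 400]
if near_limit(messages, limit=250):
    prompt = with_recap(
        ["Jurisdiction: (your state)", "Issue: timeliness of the appeal"],
        "Given those details, what deadlines should I check?",
    )
    print(prompt)
```

The recap list is written by the user, not generated automatically, so the person doing the work decides which earlier details still matter.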

AI hallucinations and factual errors are also common issues. This highlights the critical need for human verification of AI-generated information before use in legal applications.

To address these challenges, legal professionals can employ the iterative refinement techniques discussed above, asking follow-up questions and providing additional context to improve accuracy. Meta-prompting can also help optimize results.

Balancing efficiency with accuracy when using AI for certain legal tasks remains an ongoing challenge. While AI can rapidly analyze documents and generate summaries, a human in the loop is still essential for validating outputs. Legal professionals must develop skills in prompt engineering and critical evaluation of AI-generated content to leverage these tools effectively while maintaining high standards of accuracy and ethics in legal work.

For more on this subject, register for the next webinar, AI in Action: Current Applications in State Courts, in the TRI/NCSC AI Policy Consortium series.