Government agencies are looking for Gen AI adaptations that will suit their particular needs, and over the next 18 months even they will likely see advances in their use of AI to better serve the public

Since ChatGPT was released to the public in late 2022, generative artificial intelligence (Gen AI) has become part of almost every conversation about the evolution of technology and how technology will change the way we work.

And while the term has been used loosely to cover all developments in the area, Gen AI does have many specific variations, including programs that fall under the Large Language Model (LLM) technology umbrella. Indeed, LLM technology is capable of understanding large amounts of data and generating human-like text responses even while learning and developing basic patterns.

ChatGPT is arguably the most famous of all the LLM technologies and already has a large number of adaptations that are used to solve problems and complete repetitive tasks. While these adaptations are seen all over the private sector, the government sector has not historically been the quickest to adopt new technology. However, there is at least some appetite among government agencies to move in the direction of LLM programs, like ChatGPT and chatbots, with organization-specific adaptations.

As this technology develops, it moves into a space that has little to no regulation. Developers can use their imaginations to see problems in new ways in order to provide efficient and effective resolution, and on the surface that seems very beneficial. However, it is important to keep in mind that government organizations have an obligation to balance efficiency with protecting the resources they’ve been allocated and preserving individuals’ privacy and other rights.

As ChatGPT was progressing in the private sector and the government was considering its options, the Biden Administration took an important stand. On October 30, 2023, it released the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. The Executive Order describes eight policy areas and directs more than 50 federal entities to engage in more than 100 specific actions to implement the guidance in those areas.

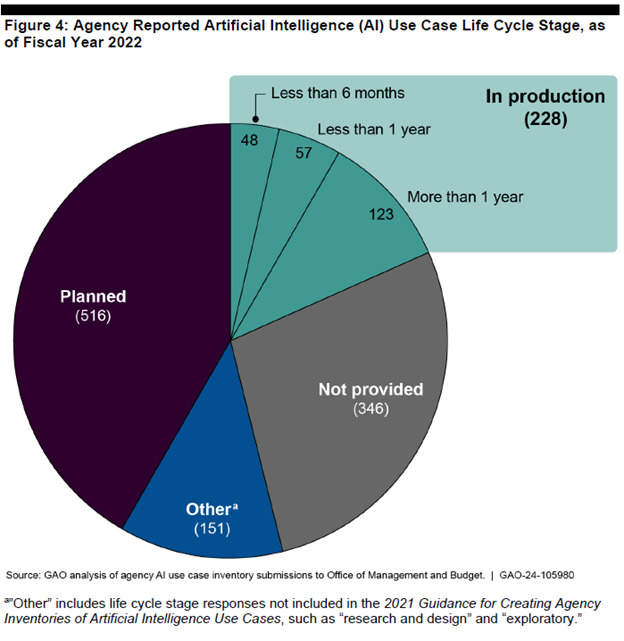

The U.S. Government Accountability Office (GAO) produced a report in December that found that while AI can be used to make the federal government more efficient, the technology needs regulation to prevent misuse. Fully 20 of 23 agencies reported about 1,200 current and planned AI use cases, citing specific challenges or opportunities that AI may be able to solve.

The GAO study also found that government agencies are currently using AI in various areas, including agriculture, financial services, healthcare, internal management, national security, law enforcement, public services and engagement, science, telecommunications, and transportation.

Yet amid all of the excitement surrounding Gen AI, LLM adaptations, and their applications, it is important that we consider the risks and how to protect people from them. While there is an Executive Order in place, it does not completely solve the problem at hand. It sets some standards and requirements, but it is a long way from policing this arena fully.

“The current Executive Order is a good placeholder. It serves as a starting point for future regulation in the area of AI and Gen AI,” says Ron Picard, a trained engineer and former military contractor who is now a director at EpiSci, a company that develops autonomous technologies. “Regulation for AI is probably going to progress like the regulation of air travel. When the technology starts developing, you don’t know what regulations you need. As we learn what the technology can do, we wind up with an organization like the Federal Aviation Administration that can design the proper regulations, enabling the use of powerful technology while protecting the safety of individuals.”

However, the type of regulatory maturity that Picard is referencing does not come overnight. It arrives only at the end of a progression: development, implementation, practical use, internal policy, and then regulation.

With more than 500 AI use cases in the planning phase and more than 200 in production, it is clear that AI-initiated processes will be coming to fruition soon. As the development of Gen AI programs continues, it will be imperative to have some guidance as to how to handle this new and powerful tool.

“Over the next 12 months, there will likely be more internal policy changes than actual government regulations,” Picard says. “The technology is evolving rapidly, and we are still learning to enable the benefits and avoid the pitfalls.” These policy changes should cover the initial development of the programs, he adds.

Picard’s personal prediction is that in the next 12 to 18 months LLMs will get some regulation, and he believes there needs to be some guidance around ethical and allowable uses. For instance, to what extent will software engineers for defense companies be allowed to use LLMs to write software for the products they are delivering for the U.S. Department of Defense? And what type of human interaction is required to do proper quality control on that software?

The old adage that slow and steady wins the race may not seem to fit in a rapidly moving technology-driven world. And yet, even the slow and steady have to keep up the pace. It will be important for government organizations to continue to watch the private sector, and then roll out their own policies and prepare for the regulatory action to come.