Advances in AI have allowed for innovative uses in a variety of industries, including law, regulatory compliance, tax & accounting, and other corporate work. So, what's next?

Today's rapid developments in artificial intelligence (AI) and natural language processing (NLP) are enabling increasingly sophisticated applications in legal tech, regulatory tech, tax & accounting, and corporate work. These innovations also challenge our understanding of knowledge work on the one hand, and of collaboration between AI systems and human experts on the other.

The AI industry has started to use the umbrella term human-centered AI to describe the methods and research questions around such concepts as:

- human-in-the-loop systems;

- how AI features are explained and understood;

- research on trust and mental models in interactions with AI systems;

- balancing human domain expertise and AI analysis;

- collective intelligence; and

- collaborative decision-making.

Yet, how can a human-centered approach to design, data science experimentation, and agile development be applied to a real-world use case, such as AI-powered contract analysis?

Empowering contract analysis

Contract analysis itself encompasses various activities around contract review, clause extraction, comparisons of positions, deviation detection, and risk assessment.

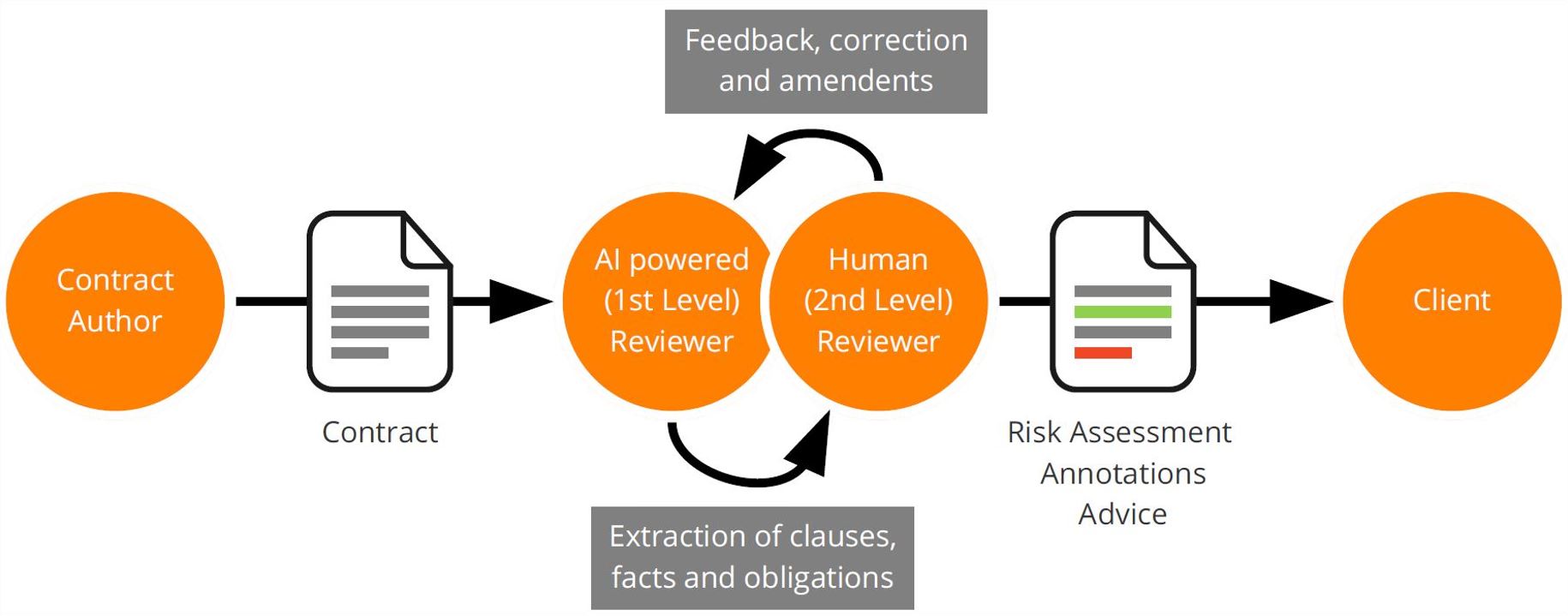

In our research, we studied how the review of and reporting on contracts is structured around finding answers to specific questions, such as which entities are involved in a contract or what the different parties' obligations are. The answers to these questions depend on the interpretation and assessment of the related legal language. State-of-the-art information extraction methods that apply NLP techniques to identify and extract specific clauses, positions, or obligations can greatly assist such task-driven review.

However, this review and analysis is not a one-way street. While a reviewer benefits from AI assistance, user input such as annotations, acceptance or rejection of AI-powered suggestions, or flagging of potential legal issues can serve as valuable feedback to the system. Ideally, an end-user engages in a dialog with the machine that not only speeds up the review but also uses such feedback to improve the extraction and analysis algorithms.
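As a minimal sketch of this feedback loop, reviewer actions can be recorded and turned into labeled examples for later re-training. The class names, action names, and labels below are assumptions made for illustration and are not taken from any particular product.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Suggestion:
    clause_id: str
    label: str   # hypothetical label, e.g. "termination"
    text: str    # the clause text the model highlighted


@dataclass
class Feedback:
    suggestion: Suggestion
    action: str                          # assumed values: "accept", "reject", "flag"
    corrected_label: Optional[str] = None


def to_training_examples(feedback_log: List[Feedback]) -> List[Tuple[str, str]]:
    """Turn reviewer feedback into (text, label) pairs for later re-training."""
    examples = []
    for fb in feedback_log:
        if fb.action == "accept":
            # The reviewer confirmed the model's label.
            examples.append((fb.suggestion.text, fb.suggestion.label))
        elif fb.action == "reject" and fb.corrected_label:
            # The reviewer rejected the label and supplied a better one.
            examples.append((fb.suggestion.text, fb.corrected_label))
        # Flagged items and uncorrected rejections are left for human follow-up.
    return examples
```

In this sketch, flagged clauses never become training data automatically, so a legal issue raised by a reviewer stays with a human until it is resolved.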

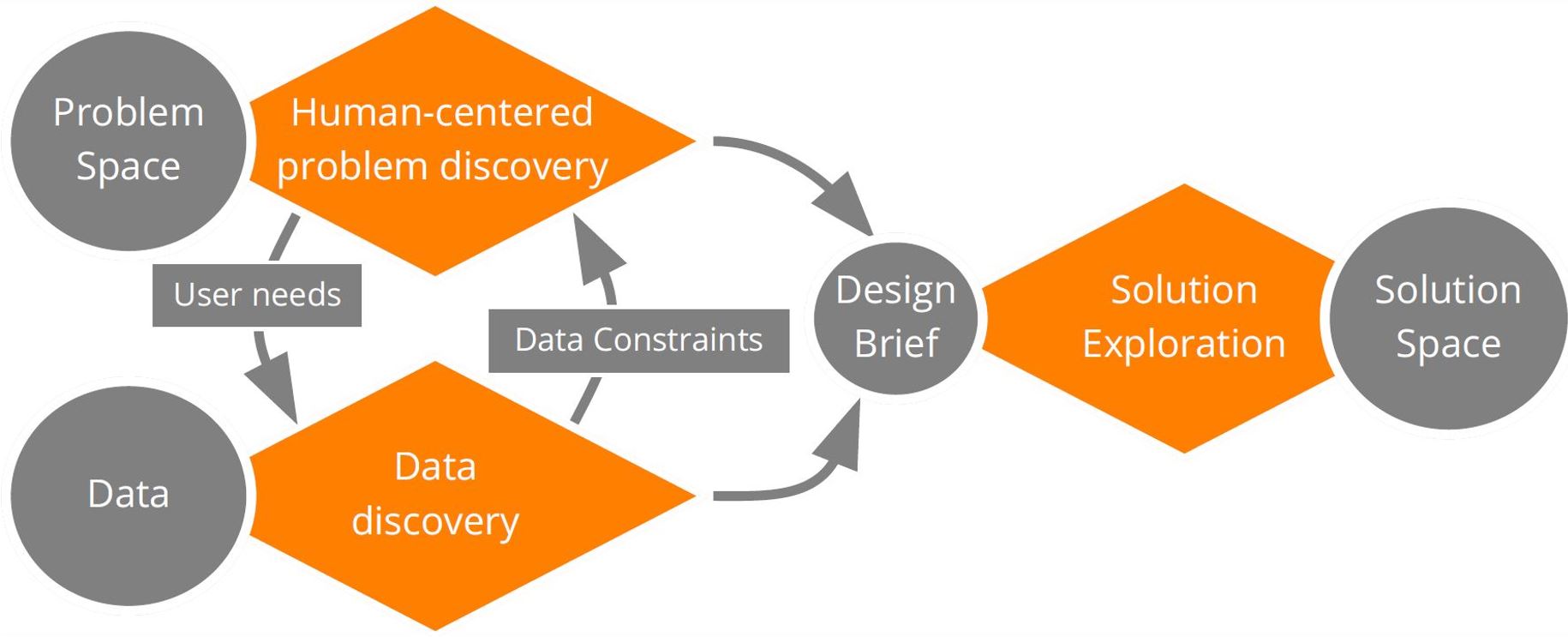

While the initial definition and design of an AI-powered system ideally start with an in-depth understanding of the problem space, user needs, and user goals, successful product innovation builds on lean experimentation and co-creation with domain experts and end-users. In this way, we can structure the design process in a participatory, human-centered way that enables various stakeholders to contribute to, evaluate, and shape the requirements and design of the system.

Moving to lean discovery

Design and AI communities are sharpening their toolkits for problem discovery and solution exploration. Various methods and techniques borrowed from Human-Computer Interaction (HCI) and User Experience (UX) can also be applied to design and experimentation for AI innovation.

First, however, we focus on understanding the information flow and the aspects of distributed cognition that occur between the different stakeholders and end-users involved in contract review and analysis.

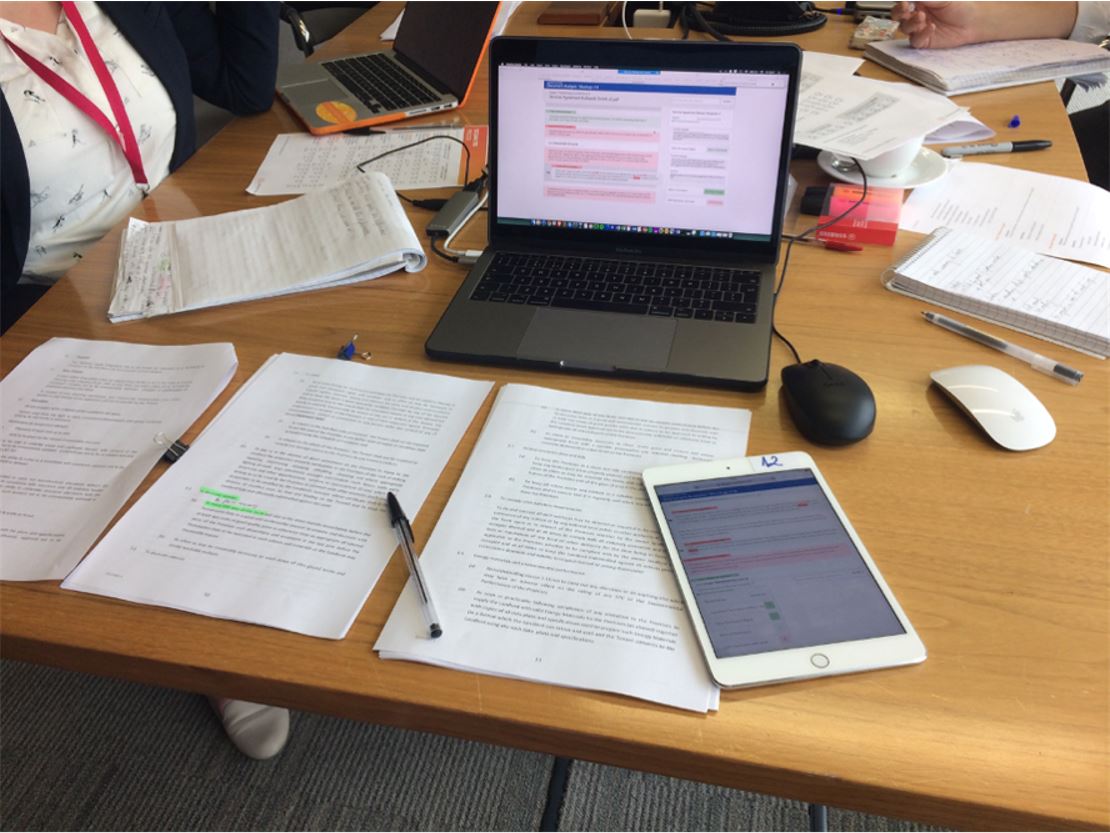

Through shadowing and co-creation workshops, user researchers elicit crucial detail about contract review processes, specific cognitive tasks, and the handling of information, as well as common pain points.

When looking at contract analysis workflows, we might observe core activities in more detail, such as the comparison of a contract under review against guidance and other documents, as well as against legal professionals' own expertise and knowledge. Lawyers or paralegals might review contracts based on internal documents such as heads of terms to identify acceptable and unacceptable positions in comparison to a client-specific playbook. They may also compare a contract to some form of standard or precedent contract.

Innovation and research teams can benefit strongly from working closely with subject matter experts, such as legal experts on commercial real estate. Getting a grasp of the legalese and terminology involved in the review, as well as the legal weight of specific terms, might prove particularly useful for framing AI research questions that help provide the right answers to legal professionals. In a lease review, for example, it matters whether a tenant must put or keep the premises in good condition, good repair, or substantial repair.

Enabling rapid experimentation

Interdisciplinary teams of data scientists, designers, and engineers can explore various alternative solutions and evaluate different aspects of this ongoing process. Data scientists research state-of-the-art AI techniques; engineers explore aspects of production and how algorithms are put into action; and designers evaluate requirements and investigate how to best translate capabilities to the end-user.

A guiding principle for this kind of human/AI collaboration is to focus experimentation on AI-assisted workflows that “keep the human in the loop.” As Ben Shneiderman has pointed out, automation that maintains some level of human control is not a contradiction. While tasks such as the search for relevant clauses, clause classification, and fact extraction are automated, as much control as possible should still reside with the legal professional as end-user of the system. AI features need to be accessible and comprehensible to a non-technical audience, so that it remains entirely up to the legal professional to decide which suggestions to use in a report or further analysis. Ideally, this process would be easy to use and able to fall back on more manual workflows and simpler mechanisms, such as keyword search or Ctrl-F.
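The fallback idea can be illustrated with a small sketch. The `model` argument here is a hypothetical callable that returns paragraph indices with confidence scores, and the `min_score` threshold is an assumed parameter. When no model is available, or when it finds nothing above the threshold, the function falls back to plain keyword matching.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical model interface: (paragraphs, question) -> [(paragraph_index, score)]
Model = Callable[[List[str], str], List[Tuple[int, float]]]


def keyword_search(paragraphs: List[str], keyword: str) -> List[int]:
    """Case-insensitive substring match, roughly what Ctrl-F would find."""
    needle = keyword.lower()
    return [i for i, p in enumerate(paragraphs) if needle in p.lower()]


def suggest_clauses(
    paragraphs: List[str],
    question: str,
    keyword: str,
    model: Optional[Model] = None,
    min_score: float = 0.5,   # assumed confidence threshold
) -> Tuple[str, List[int]]:
    """Return (source, paragraph indices); the reviewer decides what to use."""
    if model is not None:
        hits = [i for i, score in model(paragraphs, question) if score >= min_score]
        if hits:
            return "ai", hits
    # No model, or nothing confident enough: fall back to the manual mechanism.
    return "keyword", keyword_search(paragraphs, keyword)
```

Returning the source alongside the hits lets an interface show the reviewer whether a result came from the model or from a simple text match, which is one possible way to keep the system's behavior comprehensible.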

Imagine a task-driven review that lets the user select the questions they want answered about a contract. Selecting specific review tasks and consciously assigning them to the machine can serve as a mediator, both to explain the capability of the system and to allow an easy interaction between the user and the underlying AI models. Human-centered design methodology provides a framework for running experiments, making use of mock-ups and semi-functional prototypes that allow end-users to explore data science questions, as well as the interaction with and display of model output.
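A semi-functional prototype of such a task-driven review might look like the sketch below. The questions, the registry, and the regex-based extractors are all illustrative stand-ins for real extraction models, chosen only so that the example runs on its own.

```python
import re
from typing import Callable, Dict, List


def find_parties(text: str) -> List[str]:
    """Stand-in extractor: defined terms written as (the "Name")."""
    return re.findall(r'\(the "([^"]+)"\)', text)


def find_repair_obligations(text: str) -> List[str]:
    """Stand-in extractor: sentences that mention repair."""
    sentences = re.split(r"(?<=\.)\s+", text)
    return [s for s in sentences if "repair" in s.lower()]


# Hypothetical registry: the question shown to the reviewer -> extractor to run.
REVIEW_TASKS: Dict[str, Callable[[str], List[str]]] = {
    "Which parties are involved?": find_parties,
    "What are the repair obligations?": find_repair_obligations,
}


def run_review(contract_text: str, selected_questions: List[str]) -> Dict[str, List[str]]:
    """Run only the tasks the reviewer explicitly assigned to the machine."""
    return {
        q: REVIEW_TASKS[q](contract_text)
        for q in selected_questions
        if q in REVIEW_TASKS
    }


sample = (
    'This lease is made between Acme Ltd (the "Landlord") and Bolt LLC '
    '(the "Tenant"). The Tenant shall keep the premises in good repair. '
    "Rent is payable monthly."
)
answers = run_review(sample, ["Which parties are involved?"])
```

Because the registry is keyed by the question text, the list of available tasks doubles as a plain-language description of what the system can do.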

User testing and productionization

It can be particularly helpful to evaluate any contract analysis system as early as possible, with input from domain experts and legal professionals. Indeed, you might want to focus on three things: the assessment of AI model quality, the evaluation of the end-user experience, and the perceived quality of the system. Experimental user studies showed a gain in efficiency and increased levels of perceived task support when using an AI-powered system, as compared to a manual workflow. And of course, early user testing with law firms and legal professionals can inform the design and iterations of any new product.

However, it is crucial to leave enough lead time for designers, researchers, and business stakeholders to flesh out the details of the solution and define the requirements in the first place, before they move on to development. With sufficient resources and time, further questions can be explored as the development cycle goes forward.

The idea to “start small and scale up later” is yet another core concept that might help users focus resources early on. In the context of contract analysis, users might want to focus on specific document review use cases, such as due diligence, re-papering, or contract negotiation; or on specific practice areas or domains, such as real estate, service license agreements, or employment records. Once a system works, it can be scaled to other use cases, and capabilities can be added post-launch.

The future of human-centered AI

AI systems offer fantastic opportunities to support and assist professional workflows. It is crucial, however, not to ignore the incredible value of human expertise and professionals’ ability to relate information to a broader context and to “connect the dots.”

Taking a human-centered approach, we can inform the way forward into a future for knowledge work and professional services that builds on collective intelligence and human/AI collaboration.

For legal tech innovation, this human-centered approach and a focus on systems that “keep the human in the loop” seem particularly appropriate. Legal evaluation, risk assessment, and legal advice require assisting systems that are explainable and interpretable. Audit trails and design patterns that allow end-users to override machine suggestions, offer feedback, and re-train models will go far to support a better understanding of and trust in the process, and ultimately increase the adoption of AI systems that assist legal work.
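One possible shape for such an audit trail is an append-only event log in which a human override supersedes, but never erases, the original machine suggestion. The class names, actor names, and action names below are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class AuditEvent:
    clause_id: str
    actor: str       # "model" or a reviewer identifier (assumed convention)
    action: str      # assumed values: "suggest", "accept", "override"
    value: str       # the label or answer in effect after this event
    timestamp: str   # ISO 8601, UTC


class AuditTrail:
    """Append-only log: events are added, never edited or removed."""

    def __init__(self) -> None:
        self._events: List[AuditEvent] = []

    def record(self, clause_id: str, actor: str, action: str, value: str) -> AuditEvent:
        stamp = datetime.now(timezone.utc).isoformat()
        event = AuditEvent(clause_id, actor, action, value, stamp)
        self._events.append(event)
        return event

    def history(self, clause_id: str) -> List[AuditEvent]:
        """All events for one clause, oldest first."""
        return [e for e in self._events if e.clause_id == clause_id]

    def current_value(self, clause_id: str) -> Optional[str]:
        """The latest event wins, so an override replaces the suggestion."""
        events = self.history(clause_id)
        return events[-1].value if events else None
```

Keeping the machine suggestion in the history, rather than overwriting it, is what allows a later reader to see both what the system proposed and what the legal professional decided.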