Research and innovation are frequently viewed as similar if not identical, yet they are distinctly different. Moving from AI pilots to production scale demands iterative, building-block roadmaps driven by requirements and altered by unprecedented rapid-cycle products and services.

Two words elicit dozens of definitions and spark action across corporations and academia: research and innovation. Amid the continuous iteration and rapid cycling of artificial intelligence (AI), these terms and their characteristics are used interchangeably, a habit driven by unprecedented advancements across the full spectrum of AI. That is understandable given the last 18 months of prototyping and piloting AI-defined industry solutions. But when defining use cases for production-ready solutions, does the distinction really matter?

The impacts of AI across economies and employment markets will be profound. The International Monetary Fund (IMF) has said it believes that up to 60% of labor markets in developed economies will be materially affected in areas such as wages, responsibilities, advancement, available positions, and humans-in-the-loop. However, across the nascent AI ecosystems of commerce and solutions, where does research end and innovation begin? And why should we care, given the rapid-cycle AI advancements announced every week?

For enterprises seeking to leverage AI, the distinction may appear to be an esoteric one. As I’ve mentioned previously, treating it that way could prove terminal, given how much traditional research and innovation differ in their approaches, objectives, measurements, and inclusions. To understand the discrete differences, industry leaders need to do what they do best: address their opportunities and challenges in the context of a holistic roadmap.

Navigating an opaque roadmap

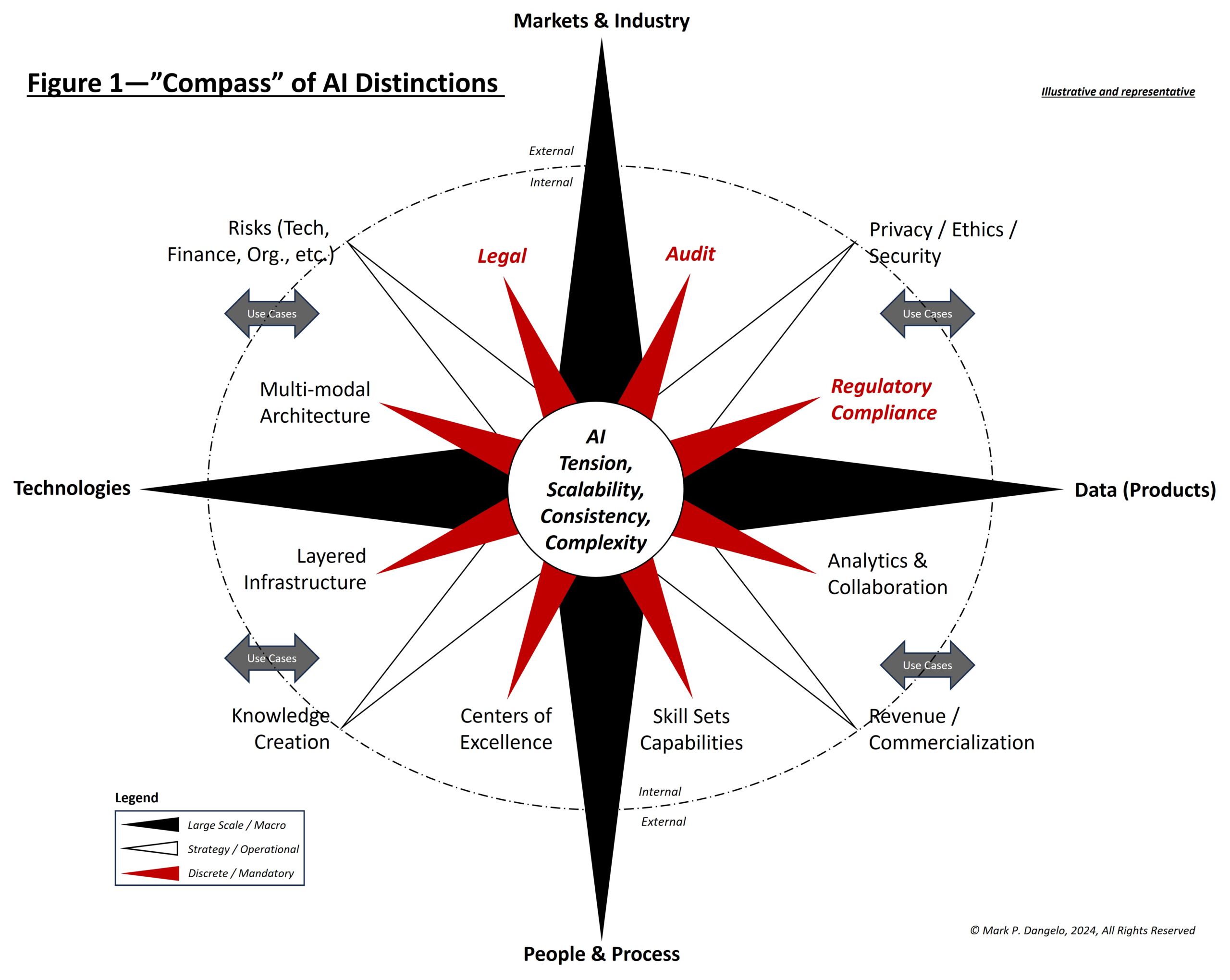

Yet when it comes to AI, leaders are working with unfamiliar touchpoints, operating parameters, fragmented capabilities, and complex skills. Stated differently, the old has become obsolete, and the new AI landscape is still evolving and opaque. To put AI (and its use cases) into the proper context, leaders need a compass of sorts, one designed to include AI not just for short-term benefits, but for long-term adaptability, auditability, and inclusion.

Figure 1 illustrates a conceptual framework, built on a compass analogy, that distinguishes among three major taxonomies of AI purpose and impact: i) external, large-scale macro trends; ii) internal, strategic, and operational characteristics; and iii) the more common discrete and mandatory AI solutions that currently captivate vendors and researchers within the enterprise.

Additionally, hidden within Figure 1 are the reasons why differentiating between research and innovation is a very material challenge for industry leaders as they seek to scale their AI pilots into larger, cross-linked production systems. A failure to address the cascading, interconnected impacts of the vast data that will be used to deliver AI-powered industry solutions replicates the mistakes of past IT generations.

From poor data quality to an inability to reuse competing production systems of record, using AI that is neither interoperable nor part of a compartmentalized compass approach will result in outcomes that are not adaptable. And because AI will change constantly as the data needed for ingestion and processing also changes and explodes in volume, adaptability is critical.

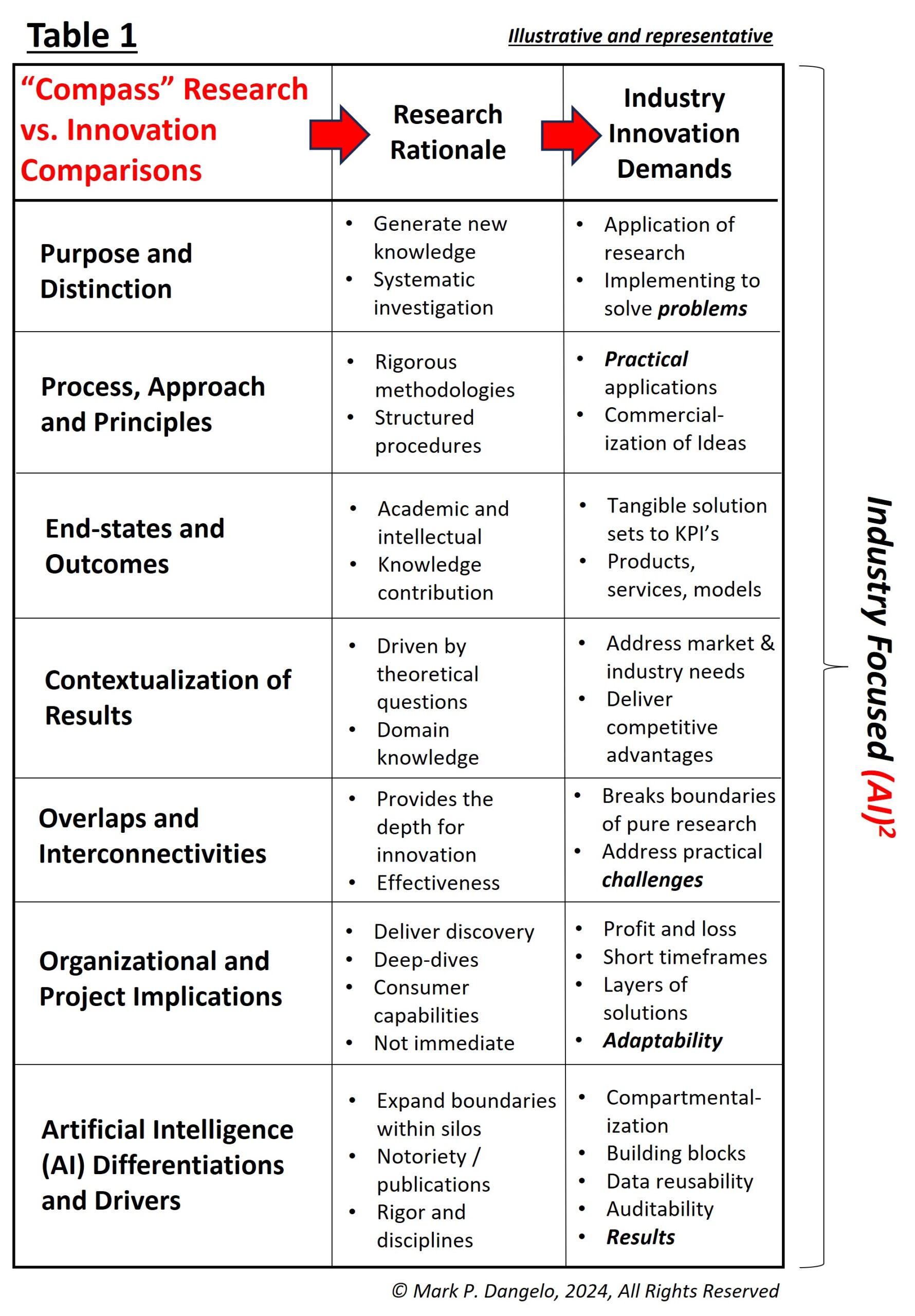

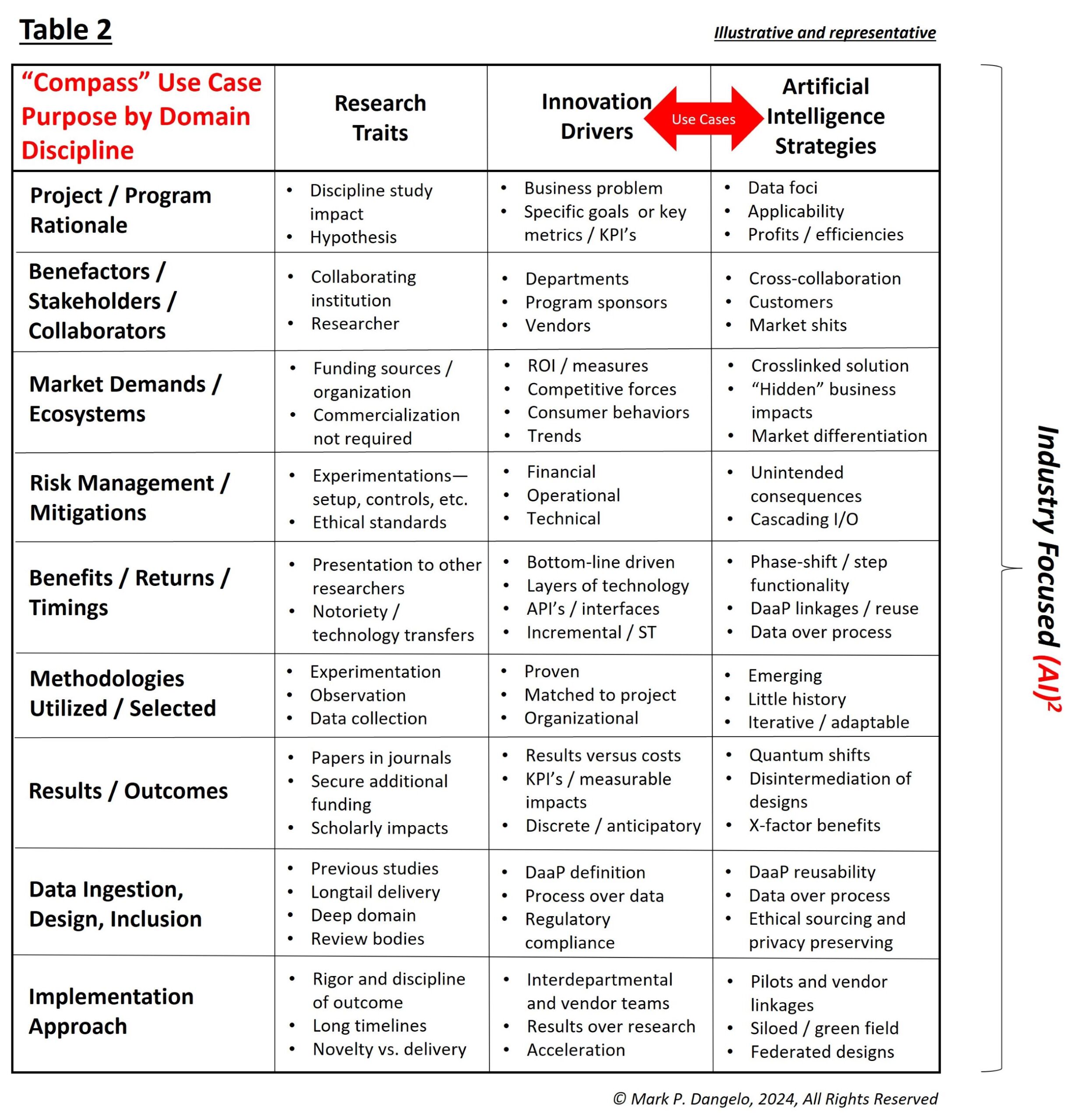

Indeed, the real distinctions and purpose of creating AI use cases that target industry outcomes are showcased in Tables 1 and 2.

While it seems like AI has matured quickly, it is still embryonic. With the explosion of generative AI (GenAI) in 2022, the research methods that established its industry applicability were applied ubiquitously in a rush to adopt, implement, and competitively differentiate products and services. Many assumed that, to deploy production-ready industry solutions, the methods and techniques showcased when AI solutions were introduced were the preferred approach that would deliver results.

Adapting to changing requirements

However, as we are now learning, and actively challenging, the original purpose and intent are adapting to vast, changing industry requirements and objectives. To illustrate these directional shifts across both external and internal classifications, AI leaders and their delivery teams must internalize granular comparisons between research intent and innovation delivery. Many of the distinctions in Tables 1 and 2 are only now becoming evident through post-implementation analysis of early-stage, production-scale AI initiatives.

Table 1 sets out the foundational distinction between research and innovation when deploying a holistic compass design like that of Figure 1. What stands out is not just the principles of purpose in the far-left column, but the differences in rationale between the approaches and purposes in the research and innovation columns.

Additionally, AI is becoming a cascading series of solutions that feed both input and output to other AI systems. These waterfall designs also call for different logical architectures and physical infrastructures that model a federation of systems, each with its own data, rules, and governing compliance. These industry realities (essentially, the stacking of AI systems) are why shifts in purpose, reusability, and outcomes will materially impact early-stage internal strategies that centered on audit, regulatory compliance, and legal (see Figure 1), from research implementations through to continual innovation designs.

The bolded items in Table 1’s innovation column showcase why adopting a research approach for AI within industry will often deliver suboptimal results. These items represent the starting point for the industry implications of results over research, which are themselves showcased in the use-case breakdown structures in Table 2. This means that to construct relevant and adaptable use cases amid continually shifting AI technologies and designs, industry leaders must broaden their plans of attack from being purely innovation drivers to also include AI strategies.

Table 2 is packed with distinctions and lessons learned over the last 18 months. It also represents a bifurcation of academia and industry that has been tightly coupled with the launch of open-source GenAI platforms. Whereas researchers want to continue conducting deep dives into AI sciences, industry innovators and market influencers recognize a pragmatic shift driven by the breadth of market capabilities, vendor offerings, and data use that can be custom-designed for industry implementations.

For many leaders who are just now feeling confident in their AI strategies and approaches, the expansion and maturation of AI’s data-driven ecosystems are just beginning. Organizational leaders who fail to differentiate when, why, how, and who will lead distinct corporate research activities versus work-process innovation programs will struggle to properly leverage investments in technology, people, and even their consumer base.

In the end, research versus innovation is not an opaque discussion when it comes to rapidly advancing AI capabilities. A failure to clearly delineate which one to use, and when to blend the two together, will be a key challenge for any enterprise striving to harness the ultimate data-driven solution that is today all lumped underneath the umbrella of AI.