In this follow-up to an earlier blog series, we speak to Michael Gialis, a Partner at KPMG, about how AI-enabled regtech can profoundly change the day-to-day workflow of many auditing and tax & accounting professionals.

In the world of solutions enabled by artificial intelligence (AI), rapid advances in innovative designs and outcomes have captured attention well beyond traditional technologists and IT staff.

The results and the hype have spurred action among many C-level leaders, board members, and even audit and tax professionals. As organizations leverage AI data and technology, discussions now center on predictability, on analyzing vast amounts of data with certainty, and on the processes and decisions made at a granular product and service level.

Decision-makers are no longer satisfied with testing a sample subset to ensure viability, adherence, and compliance with a vast body of rules and regulations; they are looking for intelligent, data-driven solutions that use all available data. What's more, across the skills and markets of regulatory technology (regtech), leaders are realizing that generative AI is just a starting point for AI-enabled regtech and for the discussions surrounding data sampling and compliance.

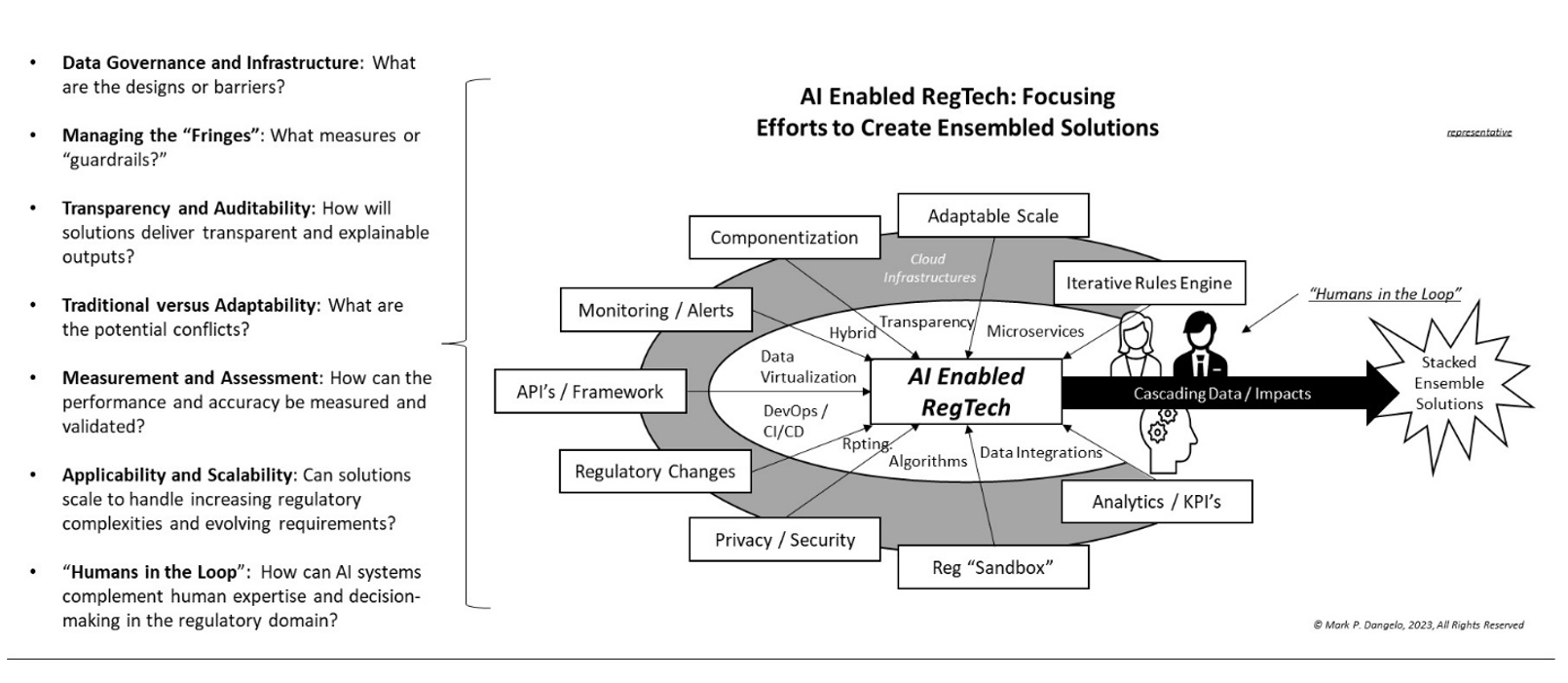

As noted in prior articles, in assessing the balance between humans and skills, AI and data, and prediction and technology, there are seven core areas that any organization seeking more AI- and data-driven decisions in regulatory compliance needs to address.

These categories and targeted questions were posed to Michael Gialis, a Partner at KPMG.

Mark Dangelo: How can organizations ensure data quality, integrity, and security when using AI-enabled regtech?

Michael Gialis: When advising our clients, we encourage them to consider the benefits, costs, and risks of employing AI in their workflows. It is important for everyone to understand that the capabilities of the tool are tied to the quality of the data it has been trained on, has access to, and is prompted with. The quality of the output, the ultimate utility of the tool(s), and the appropriateness of the application or use case are all correlated with the level of confidence that the risk and reward of the circumstance demand.

The prerequisite to digital transformation is achieving data integrity and security. Digitalization, or the automation of workflows, requires digitization. AI holds the promise of effecting digital transformation because it has the interpretive capability to effect digitization and, potentially, the ability to execute workflows autonomously. Yet without data integrity and security, that promise is limited or unrealized.

Mark Dangelo: What measures or “guardrails” should be in place to handle sensitive and confidential information appropriately, which may be ingested as part of a robust and expansive AI regtech effort?

Michael Gialis: One of the preparatory fundamentals is understanding the AI provider's license agreement. Those provisioning these environments need to be able to answer some basic questions, such as: What happens to the data that the application is trained on, has access to, or is prompted with? How do we evaluate the quality of the input? What procedures are in place to validate the accuracy of the output, not only at instantiation but over time?

Part of the utility of AI is its ability to access information across functional silos and compile that data. Source references and repeatability are common means of assessing confidence. Often, however, AI does not provide such references, because the tools are probabilistic by nature and their outputs are inferred. These contradictions represent paradoxes that require the development of new frameworks.

Mark Dangelo: How can organizations ensure that AI algorithms are not "black boxes"? And can their functions, their answers, and their constant adaptation (which is by design) be audited, explained to consumers, and validated by industry regulators and stakeholders?

Michael Gialis: Some AI solutions do provide references. However, there is a distinction between employing data to support a story or express a point of view and developing a story from probabilistic outcomes.

Constraining or relaxing data accessibility, prescribing the user community, and targeting and tailoring the training of the AI model will all alter the responses. These are means of risk mitigation, but they also create constraints. AI affords transformative potential and as-yet undefined opportunities for workflow enhancement.

More effective is the role of the proficient human-in-the-loop, who can rapidly discern the accuracy of a response. When we consider the scientific method and its application to modified workflows that could benefit from AI, we are able to establish confidence. By recognizing the tool's capabilities, we achieve this confidence despite the opacity of AI. Adapting the workflow to the tool's capabilities and limitations, training the human-in-the-loop, defining use-case conditions, and employing workflow governance all improve contextual confidence.

Mark Dangelo: What are the potential conflicts between AI-driven decision-making and regulatory frameworks?

Michael Gialis: Workflow transformation implies workforce disruption. However, this disruption takes the form less of job loss than of skills evolution. That evolution comes from learning to operate alongside, with the benefit of, and in judgment over these new capabilities. Workflow transformations will drive an evolution in our talent requirements and in up-skilling. In this rapidly evolving market and technology segment, the tool itself is constantly evolving.


We've already started to witness drift, a possible or perceived degradation in AI performance that may be attributable to the nature of the queries to which the tools are exposed. This speaks to the need to attend to the AI's training and exposure over time. Indeed, the way your organization develops the AI over time can become part of the value the tool delivers to your organization.

Mark Dangelo: Can AI-driven regtech solutions scale to handle increasing regulatory complexities and evolving requirements?

Michael Gialis: It is no accident that current AI offerings have been released as some form of test or pilot version. Part of the inherent benefit of these tools is their machine learning attribute. Consequently, employing these applications in a test or training context both allows and warrants improvement of the user's skills and of the application itself.

The parameters of a regulatory use case lend themselves well to an AI solution because of its adaptability, provided the training and governance considerations discussed above are addressed. Additionally, we have found that certain data can be tapped multiple times for different regulatory and business purposes.

Mark Dangelo: How can organizations strike the right balance between automation and human oversight to achieve optimal results, while ensuring that they have the skills and capabilities necessary to sustain results and necessary adaptations?

Michael Gialis: Workflow identification is one of the earliest steps in determining the opportunity associated with AI-enhanced workflows. Our clients often generate use cases that serve as the hypotheses in the scientific-method-based framework mentioned earlier. Many of these use cases envision AI augmenting the humans-in-the-loop.

From now through the medium term, we expect a risk-based understanding of workflow enhancements, coupled with an expectation or probability of value capture, to guide the level of human-in-the-loop participation in each process.