In 2024, AI — which excelled in pilot programs — has met the reality of organizational capabilities, interdependent systems, and scalability. Making outcomes worse are emergent technologies, scarce skill sets, and use cases that cannot adapt to rapid cycle ecosystem requirements. Is there another approach?

Why, in 2024, do 80% or more of all piloted artificial intelligence (AI) initiatives quickly lose efficacy when scaled into a production setting? Noted caveats and challenges for these fail-to-scale innovations include unanticipated data ingestion designs, fragmentation of data storage, non-linear data processing complexities, and an inability to anticipate active data governance automation and rules-fueled oversight. None of these is trivial, and none can be addressed without industry-experienced skills and context.

Beyond the demand for generative AI (GenAI), beyond the efficiencies sought across operational processes, and beyond AI ethics, bias, and hallucinations is the question: Are the scalability and performance failures linked to the systems' architectures (centralized, distributed, or decentralized), or to the data used to train models and make intelligent decisions? Are they a consequence of AI platform assumptions, inadequate independent testing, or scarce advanced skill sets tied to model realities?

The lessons learned are that AI requires a holistic approach to its deployed purpose, goals, and measurements, one that often conflicts with traditional infrastructures, organizations, and regulatory compliance. To ensure that vertically defined AI systems participate across organizational functions (such as legal, audit, and regulatory compliance), the cascading, adaptable prompts, inputs, and outputs must be designed and anticipated before performance and scale can be achieved.

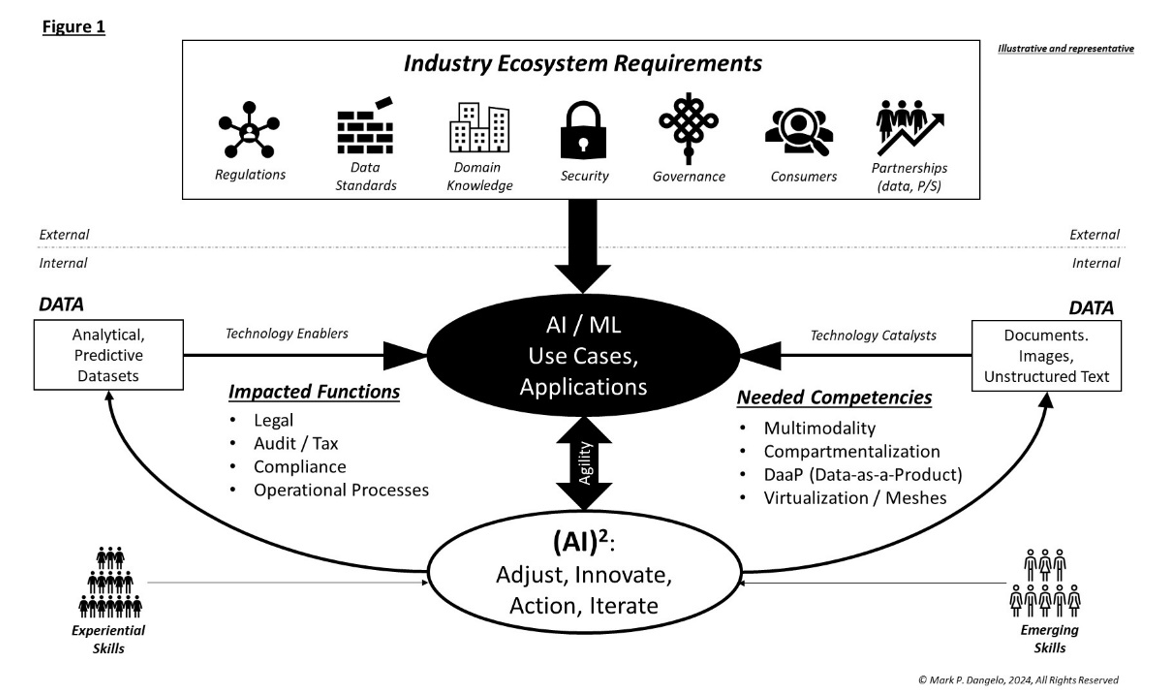

The design assumption that AI scale and performance are singularly about clouds, servers, chipsets, and speed fails to recognize the multimodality of demands and technologies, which must be interconnected more precisely than established application design approaches require. To move beyond the 2023 prototypes and pilots and into robust, transparent, and adaptive AI production capabilities, a holistic approach (like the one shown in Figure 1) must be followed.

Indeed, Figure 1 illustrates the multiple anchors that continually define and reshape AI. Starting with the industry ecosystem requirements, the downstream impacts on the use cases set the designs and inputs necessary for not just initial release but for data inclusion. During the 2023 internal piloting stages, the inclusion of multiple data types and technologies also impacted the efficacy of the use cases. AI deployment within a silo that does not account for continuous system-learning and model growth produces great promise but an inconsistent return on investment (ROI).

We also note that use cases are not static. Under an (AI)² practice (one that incorporates the concepts of adjust, innovate, action, and iterate), improvements from feedback are leveraged not just across use cases but across the entire internal AI sphere of discrete applications, and then cascade back into the data and technology. We can also see from the representation that within the core loop, there are impacted functions and new competencies that must be part of production deployment.

Taken together as a deployment model for the impacted functions, we arrive at potential use cases. Yet we also need to address the implications of AI demands and competencies that underpin and anchor advancements and cascading interconnectivity. To this end, three tables that address these impacted functions are provided below to illustrate why pilot programs will continue to struggle and fail.

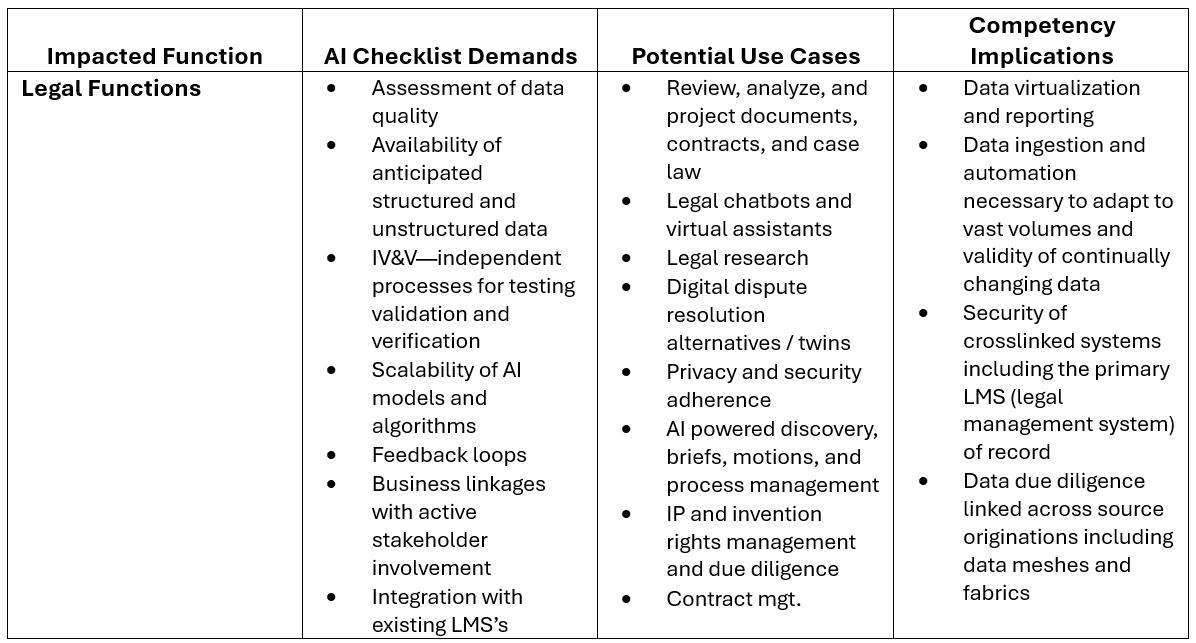

Legal functions (representative and illustrative)

Table 1 represents a few of the current and emerging use cases within the siloed legal area. However, as we will note in Tables 2 and 3, there are implicit overlaps and consistencies, especially within the data, which, if leveraged, could not only ease the move to production scale but also provide the balance and controls necessary across interconnected AI silos. For executives seeking budget leverage, the following tables, when viewed through the lens of deploying GenAI, neural networks, machine learning, and robotic process automation (RPA), showcase a need that goes beyond siloed point solutions.

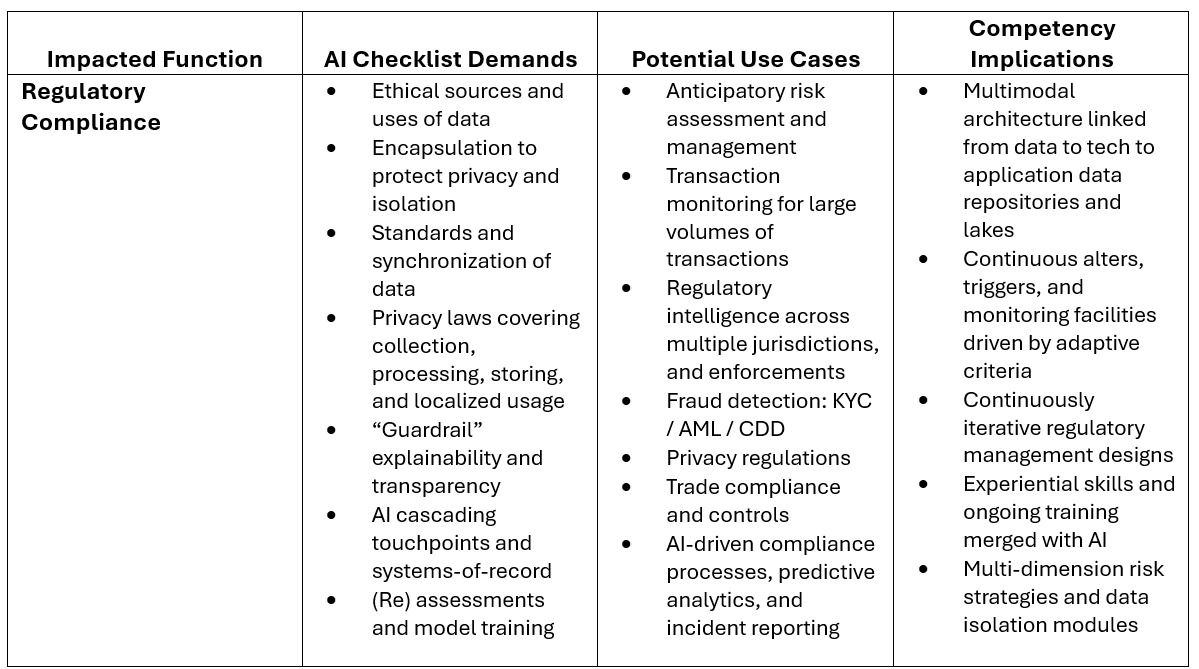

Regulatory compliance (representative and illustrative)

Between Tables 1 and 2, we note dependent checklist demands and competency implications. We can deduce that some data is common across the functional disciplines, yet are we confident that the pilots were trained on linked and vetted data that spans both? Were these AI designs dealt with consistently? More importantly, if 60% to 80% of ongoing costs reside in maintenance, then what happens to both designs when the shared data changes? Table 3 shows a third function common to every enterprise regardless of industry: the audit function.

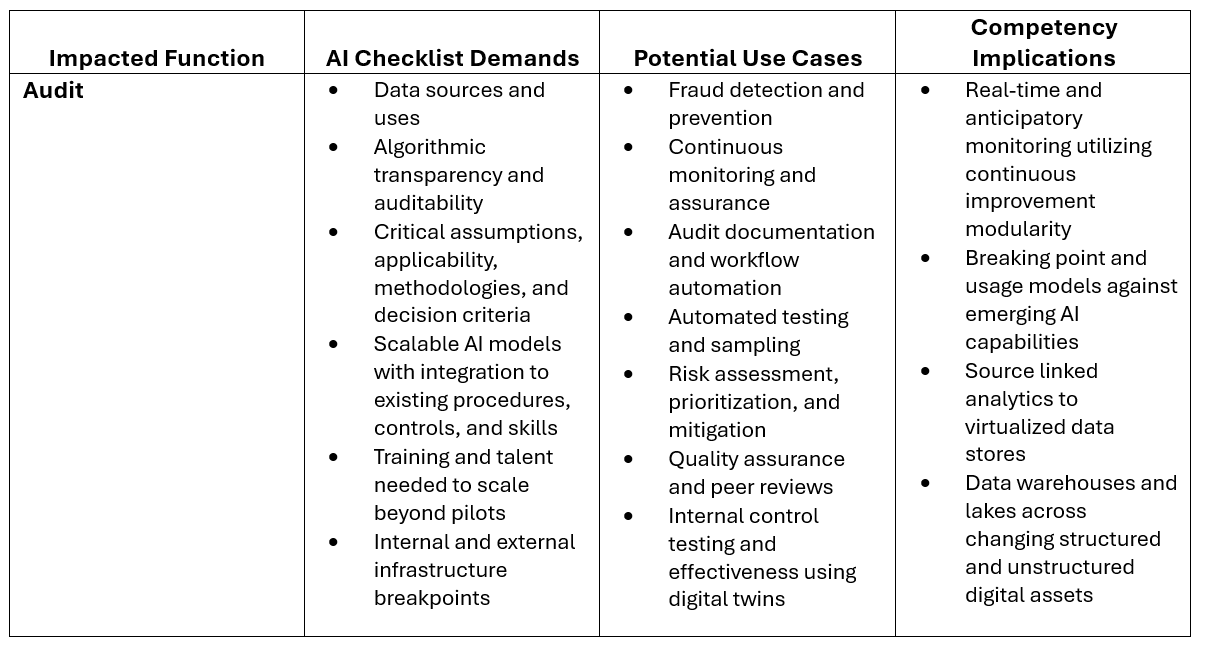

Audit (representative and illustrative)

Now we can begin to understand that AI isn’t a magic button that is easier than traditional application solutions. There are three functions and three tables, each with unique use cases, yet all share varied but interconnected data, checklists, and competency implications interpreted by diverse AI technologies. What could possibly go wrong, fail to scale, or fail to deliver improved accuracy?

In the end, stripped of its algorithms for intelligent decision-making, AI is fundamentally a next-gen data-driven system. It comprises layers of structured and unstructured data both inside of and external to the operating environment. This raises a question: if the outcomes of AI solutions and their continual learning can be altered by changing the data they ingest and process, impacting even the cascading inputs and outputs of upstream and downstream systems, then is it still the same AI system that was piloted, with the same biases, hallucinations, and errors?
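One way to make the question above concrete is to record what data a system ingested during its pilot and compare that record with what it ingests in production. The Python sketch below is illustrative only: the manifest layout, the source names (`contracts`, `regulations`, `audit_logs`), and the helper functions are hypothetical and not drawn from any specific platform.

```python
import hashlib
import json


def fingerprint(manifest: dict) -> str:
    """Return a stable SHA-256 digest of a data manifest (key order does not matter)."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def changed_sources(pilot: dict, production: dict) -> list:
    """List source names that were added, removed, or altered since the pilot."""
    names = set(pilot) | set(production)
    return sorted(n for n in names if pilot.get(n) != production.get(n))


# Hypothetical manifests: what the system ingested in the pilot vs. in production.
pilot_manifest = {
    "contracts": {"schema": "v1", "rows": 1200},
    "regulations": {"schema": "v1", "rows": 300},
}
production_manifest = {
    "contracts": {"schema": "v2", "rows": 54000},
    "regulations": {"schema": "v1", "rows": 300},
    "audit_logs": {"schema": "v1", "rows": 98000},
}

# If the fingerprints differ, the production system is no longer fed what the pilot was.
same_data = fingerprint(pilot_manifest) == fingerprint(production_manifest)
drifted = changed_sources(pilot_manifest, production_manifest)
print(same_data, drifted)
```

A mismatch does not prove that the production system behaves differently, but it flags that the pilot's results were obtained on different data and should be re-validated.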

The scalability of AI depends on all of the above. Yet we also see that the legal function touches regulatory compliance, which in turn touches the audit function. How, by whom, with what, and where will organizations ensure that their use cases stay synchronized and are delivered consistently, accurately, and with adaptation?
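As a rough illustration of what such a synchronization check could look like, the Python sketch below finds the data sets shared by more than one function and flags those held at different versions. The function names, data set names, and version labels are hypothetical placeholders and are not taken from Tables 1 through 3.

```python
from collections import defaultdict

# Hypothetical inventory: the data sets each function's AI use cases consume,
# and the version of each data set that function currently relies on.
function_data = {
    "legal": {"contracts": "2024-01", "regulations": "2024-02"},
    "regulatory_compliance": {"regulations": "2024-01", "policies": "2024-02"},
    "audit": {"contracts": "2024-01", "policies": "2024-02", "regulations": "2024-02"},
}


def shared_datasets(usage: dict) -> dict:
    """Map each data set used by two or more functions to {function: version}."""
    by_dataset = defaultdict(dict)
    for function, datasets in usage.items():
        for name, version in datasets.items():
            by_dataset[name][function] = version
    return {name: users for name, users in by_dataset.items() if len(users) > 1}


def out_of_sync(usage: dict) -> list:
    """Return shared data sets whose versions differ between functions."""
    shared = shared_datasets(usage)
    return sorted(name for name, users in shared.items() if len(set(users.values())) > 1)


shared = shared_datasets(function_data)
stale = out_of_sync(function_data)
print(sorted(shared), stale)
```

In this made-up inventory all three data sets are shared, and only `regulations` is out of sync, because regulatory compliance relies on an older version than legal and audit do.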

In Figure 1, the holistic approach was presented as a way to deliver consistency and adaptation across all AI environments, not just within a business unit or functional model. Enterprise data management and multimodality architectures for AI promote consistency, but today this runs counter to academic and industry practices. But why, beyond its difficulty and complexity?

AI holds tremendous potential, and that will not change. What needs to change are the methods and techniques used to ensure cross-functional and cross-platform consistency. That checklist demand resides with the organization, its leaders, and its experienced internal staff. For that reason, 2024 will be the litmus test of AI beyond the easier-to-grasp technological advancements.

For more on the impact of GenAI, you can download the Thomson Reuters Institute’s 2024 Generative AI in Professional Services report.