The proliferation of GenAI and large-language models pose a massive security threat, and while upcoming regulatory frameworks will help, companies can prepare today through zero-trust architecture that ensures "Never trust, always verify"

The 2024 Report on the Cybersecurity Posture of the United States, released by the White House in May, further emphasized the vigilance by state and non-state actors that may be mitigated by actions in our control through proactive cyber-incident management, remediation of vulnerabilities, and enhancing resilience.

Indeed, the proliferation of generative artificial intelligence (GenAI) and large-language models (LLMs) pose another tangled web of liabilities — such as jailbreaking and prompt injection attacks — that jeopardize the sanctum of privacy, opening the door for bad actors to wreak havoc, exploit weaknesses, and reveal personal data.

Principles of zero trust

While various regulatory frameworks for governing GenAI are works-in-progress — such as those being created by China, Japan, the United States, and others — they may provide limited assistance in combating bad actors. Encapsulating GenAI models with a zero-trust architecture provides various security perimeters and outer layers complemented with inner layers, which exhibits challenges for bad actors at every step, although still isn’t a panacea. Zero-trust architecture should include governance risk & compliance and data loss prevention control measures in contributing to the overarching war against cyber-criminals, fostering the culture of Never trust, always verify.

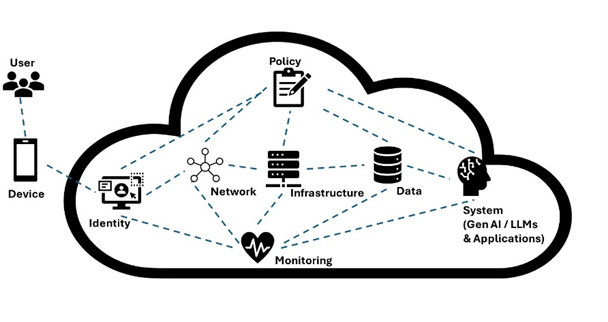

Contextualizing the primary principles of zero trust as defined by the National Institute of Standards and Technology (NIST) for GenAI by security perimeter, may consist of: i) policy, ii) identity, iii) network, iv) infrastructure, v) system, vi) data, and vii) monitoring. The principles are intertwined during implementation of zero trust.

Enterprise policies should be dynamically defined, modulating at the frequency of regulatory requirements, inclusive of privacy, system protection, data, and user communities. Identities should be centrally managed in accordance with identity access management, defined by role-based access control and attribute-based access control, as well further enforced using multifactor control.

The network should employ micro-segmentation, isolating every system, such as an application containing GenAI models, to limit the blast radius and lateral movements of any attacker. The underlying infrastructure of systems supporting GenAI models should be deployed using containers hosted on virtual machines which further natively remove certain types of attacks.

Systems developed using serverless technologies deployed using the techniques mentioned above, inherently prevent other attack types in cloud-native ecosystems. Discovery of all data throughout an enterprise should be categorized, labeled, and protected by policies governed by data loss prevention, limiting access to sensitive data to only those who absolutely need it. Monitoring utilizing the SOAR (security orchestration, automation, and response) and SIEM (security information and event management) tools are critical components for the overall GenAI system, alerting based on attack vectors and further supplemented with a mature incident management response.

GenAI’s zero-trust playbook

Secure GenAI investments transitioning from Steps 1 to 4, improving digital safety through an organization while elevating trust with its clients.

1. Data

Data governance begins with capturing the organizational goals, stakeholders, key performance indicators, and the definition of success. The organizational privacy policies should be aligned with data provisioned for usage by AI models, while the stakeholders should confirm agreements for usage by AI models and the output from the models. Stakeholders should confirm that respective processes for safe AI are executed, such as responsible and ethical methodologies, that should result in debiased insights or recommendations from the models.

After capturing the governance structure, data discovery should be performed across the digital estate, identifying sensitive data such as Social Security numbers, account numbers, payment card information, address, and government issued identification. Classifiers may consist of automatic pattern recognition, via machine learning, and they may be pre-trained, out-of-the-box, or custom trainable. Upon classifying the data, identify what’s relevant for ingestion by the model as well as output from the model, and define data loss prevention policies to limit access to the respective data sources. Any data used by the AI models should be isolated within secure containers and accessible via micro-segmented networks, to limit the blast radius.

2. Identity

Define security policies via identity access management and data loss prevention, prohibiting access to GenAI models as well as their inputs and outputs, by role-based access control or attribute-based access control, while restricting data formats. Use entitlement management to define specific roles or assign users per role, granting permissions to applications, data, and models.

Federated identification may also be used, but regardless of the identity access management approach, identities should be validated via identity providers or brokers, who confirm their authenticity. Also, incorporating device fingerprinting may be further beneficial in analyzing user behavior, such as browser type, device type, and IP address, thus reinforcing identify verification. In addition, secrets, key management, or certificates may also be employed to strengthen identity verification.

3. DevSecOps

DevSecOps — the integration of security practices into every phase of the software development lifecycle — ensures appropriate governance and robust security testing. Automated testing should include scenarios synthesized for privacy, code of conduct, and safety, while reinforcing regulatory and compliance requirements. The SecOps process should be orchestrated through formal continuous integration and continuous deployment (CI/CD) automated tools, ensuring consistency of packaging within containers, vulnerability assessment testing, and testing threat attack vectors, while maintaining proper version control the container images. The threat attack vectors should be defined for the respective AI models, simulating different scenarios such as prompt injection or jailbreaks.

The containers should have task segregation while the respective virtual machines hosting them provide full security isolation, all of which are then deployed within virtual networks that are also isolated and segmented. Identity access management should be enforced for the respective containers, such as by using key vaults, secrets, or certificates.

4. Monitoring

Refreshing your cybersecurity investments for GenAI should include provisions for data loss prevention, incident management, and other security factors. The inputs and outputs of your AI models should be regulated by your provisions, alerting due to anomalies, while positioning your organization for a rapid recovery as result of any incidents. Cloud access security brokers can provide granular monitoring of the cloud usage services for AI models and their cloud native architecture. The security broker facilitates managing entitlements, accessing controls, detecting unusual activity, as well as preventing data loss via its data loss prevention capabilities, based upon policies defined to protect the organization from usage of sensitive data.

Subsequently, virtual machines and containers hosting AI models should also be monitored, using predefined dashboards which may further aid the collecting and analysis of metrics for performance, resiliency, and networking. Selectively, profilers may be used intermittently to assess performance of containers. AI models such as LLMs should be evaluated for hallucinations and content safety, ensuring outputs are responsible and ethical, as well as trustworthy.

In accordance with data consumption by AI models, data loss prevention and governance risk and compliance best practices should be employed. And where abnormal behavior is detected the respective data security and loss prevention policies should reinforce least-privileged access with alerts or notifications. In addition, active monitoring of changes to legal or regulatory requirements per geographic region may impact compliance standards, resulting in potential privacy violations by AI models and respective data dependencies.

Where do we go from here?

As organizations iteratively improve security posture, cyber-criminals also continue to advance their efforts, modulating at a faster frequency, while uncovering vulnerabilities with GenAI models and their bedrock infrastructure. Emancipating enterprises from the fallacy of security necessitates a continuing calibration of an organization’s zero-trust architecture. As cybersecurity leaders welcome zero trust as a journey, not a destination, and empower their teams with GenAI tools, they’ll be better equipped to outfox the fox watching the hen house.