According to a recent report, corporate risk & fraud professionals are approaching generative AI more proactively than their professional peers, but they’re also conducting training and putting policies in place to ensure GenAI is used ethically.

By the very nature of the profession, corporate risk & fraud professionals could be seen as averse to change or new ways of working. However, because today’s risks are increasingly complex and demand rapid reaction times, these professionals have shown a willingness to adopt new technologies to better keep pace with bad actors.

That focus extends to generative artificial intelligence (GenAI). Corporate risk & fraud departments are ahead of other professional services, such as legal and tax, in embracing GenAI, according to the Thomson Reuters Institute’s 2024 Generative AI in Professional Services report. Not only are corporate risk departments adopting GenAI at a higher rate and feeling more positive about its eventual integration into the enterprise, they are also taking the lead in providing education and policy guidelines around GenAI use.

At the same time, their response to GenAI is not an unconditional embrace of the technology but rather a recognition that its use and implementation will need to be monitored. By and large, risk & fraud respondents said they expect GenAI to affect their daily workflows, but that the technology needs to be implemented with care.

“Generative AI will be a game changer as the internet was,” said one Australian corporate risk director. “There are exciting possibilities as this is developed and will certainly change the way we work. Lots of potential gains and abuses of course.”

Higher usage and positive opinions

Although GenAI has been in the public consciousness for only about a year and a half, corporate risk & fraud professionals have already begun investigating how the technology works, both in their own work and in the hands of bad actors.

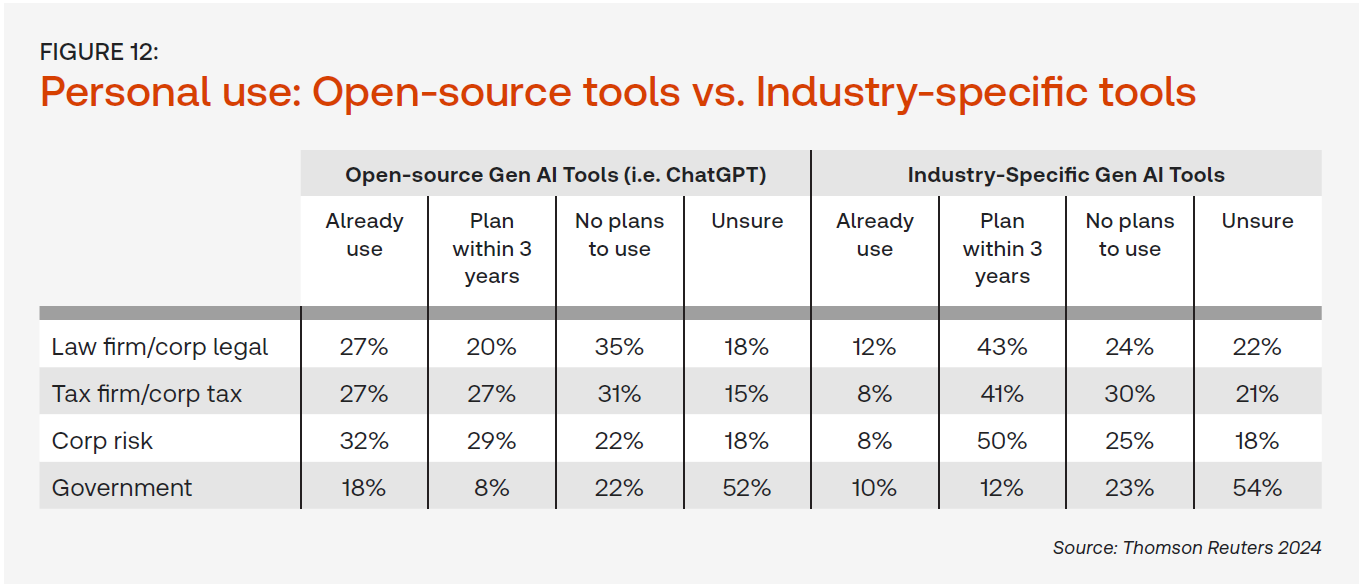

Nearly one-third (32%) of risk & fraud professionals said they have used public-facing GenAI tools such as ChatGPT, the highest usage rate of any industry in the report. An additional 29% said they plan to use these tools within the next three years, while just 22% said they had no plans to use public GenAI tools. (The remaining 17% said they were unsure.)

Adoption of GenAI tools built specifically for risk & fraud work is currently lower, possibly because these tools are newer to the market. Just 8% of corporate risk professionals said they currently use paid corporate risk software or online solutions that incorporate GenAI technology. However, this figure could rise quickly: an additional 14% said they plan to adopt these tools within the next year, and another 36% plan to do so within the next three years.

Respondents also described a variety of risk & fraud use cases for GenAI, with more than half of current GenAI users citing risk assessment and reporting; document review; document summarization; and knowledge management as the primary ways they use the technology. Several respondents also identified industry-specific uses for GenAI tools.

“My job primarily looks for characteristics of bad actors in the payments industry,” said one U.S. corporate risk manager. “I believe we can train generative AI to weed these out much more efficiently than the current third-party software and manual processes that we currently utilize.”

An Australian corporate risk analyst also explained that his team was excited by GenAI’s potential because “as a forensic planner dealing with primary EOT (extension of time) claims worth millions of dollars in the mining and construction space, we have to review thousands of lines of schedules over generally long time periods to calculate the cause of delay.”

For more on the impact of GenAI on professional services, see the Thomson Reuters Institute’s 2024 Generative AI in Professional Services report.

Corporate risk respondents also expressed more positive feelings about GenAI overall. When asked their primary sentiment toward the technology, 30% of corporate risk respondents said they were excited, a higher share than any other industry and above the 21% overall average. Meanwhile, just 16% of corporate risk & fraud respondents said they were concerned or fearful of the technology, slightly below the 18% average among all respondents.

Many corporate risk respondents attributed their positive outlook to the productivity gains GenAI could bring, especially its ability to automate routine work. Some also said they expect GenAI to help with broader industry pain points, such as career development.

“In an industry challenged with [a declining number of] professionals, we need to leverage technology to offset the losses and augment the creative workflows,” said one U.S.-based risk department executive.

The need for ethical use

Despite their early enthusiasm for GenAI tools, however, corporate risk & fraud professionals indicated that these tools will catch on within the profession only if they are used responsibly and ethically.

Corporate risk respondents shared many of the wider professional services market’s concerns about the technology itself, with worries over accuracy, privacy, and security all ranking near the top of their GenAI concerns. However, corporate risk respondents in particular also expressed societal concerns about GenAI usage. For example, 70% of corporate risk respondents said complying with relevant laws and regulations would be a barrier to adopting GenAI, a higher percentage than any other type of respondent. Similarly, 63% of risk respondents cited ensuring that GenAI tools are used ethically and responsibly as a barrier, a higher share than either corporate legal (60%) or corporate tax (49%) respondents.

“The industry has a lack of control mechanisms in place, and governments and large corporates have little or no understanding,” said one Australian corporate risk director.

A Canadian corporate risk executive director noted: “As with anything internet-related, while there are positive elements which advance productivity, efficiency, etc., there are bad actors who will use it nefariously.”

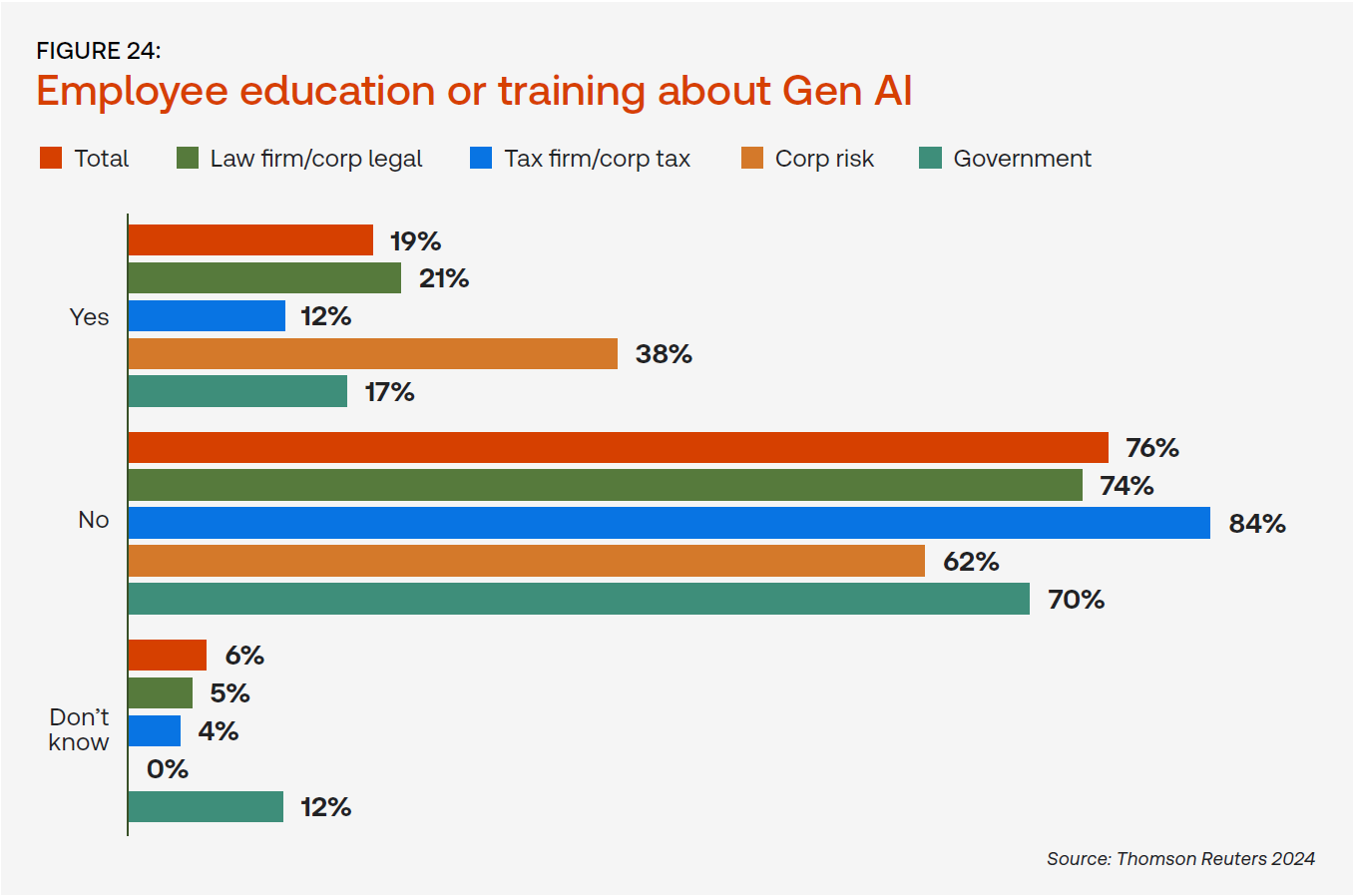

These concerns may explain why, according to the survey, risk & fraud departments are taking GenAI education more seriously than those in other professions. A larger share of corporate risk respondents (38%) said their organizations provide training and education around GenAI than any other type of professional surveyed, well ahead of corporate legal (25%) and corporate tax (10%) respondents.

“Humans will likely always be required to verify,” said one New Zealand investigator, adding that GenAI can reduce the amount of human decision-making required to create documents or risk profiles. “AI gives the opportunity to research controls, legal precedent, legislation, etc., which humans may miss with a manual search. This will naturally need to be sense-checked but provides the groundwork to start.”

This focus is also reflected in the share of corporate risk professionals reporting that their organizations have enacted GenAI policies. More than one-quarter (27%) of corporate risk professionals said their departments or organizations have a policy specifically covering AI and data, and an additional 19% said GenAI was covered under a broader technology policy. Among all professionals surveyed, those figures were just 12% and 11%, respectively.

Today’s risk & fraud professionals are tackling increasingly complex risks that demand more knowledge and technological competence than ever before. Ultimately, the high rate of GenAI adoption and the focus on policies and training make clear that corporate risk departments see a near future in which GenAI isn’t just helpful but essential.